An unusual thing happened last week, and I’m trying to process it. I’m hoping writing about it will help me get some clarity around my thoughts and feelings.

For the second time on this blog, I have the unpleasant task of eulogizing a friend. An internet friend, but a friend nevertheless.

Early Thursday morning, on 22 May, there was a horrific plane crash in San Diego, California. All six aboard the plane were killed. Thankfully, despite crashing into a neighborhood of military housing, nobody on the ground lost their lives.

On board that jet was an internet friend of mine, Daniel Williams.

I didn’t know Daniel super well, but we knew each other. To my recollection, we met only once in person, and if my [admittedly awful] memory serves, it was whilst waiting in line to enter one of the live tapings of The Talk Show at WWDC. At that point, we knew each other a smidge, but mostly only because Daniel very kindly facilitated getting me a discount on a GoPro.

Daniel had spent the last eight-ish years as a senior software architect at GoPro, working on their iOS app. He had actually just accepted a dream job offer at Apple, working in the health organization, and was due to start there on this coming Monday.

Over the last year or two we got closer, though I’m not trying to say that we were close. We often exchanged DMs on Instagram and occasionally chatted via iMessage. I was super excited for him to start at Apple soon, though clearly not as excited as he was. 😊

On Wednesday night, the 21st, Daniel was in New York, having just seen a band he knew play. Daniel’s “former life” was as the founding drummer of the metal band The Devil Wears Prada. He had this to say after I asked him about the show:

Sooooo good!

Their show was insane… it is so crazy to see them go from playing a Chinese restaurant (I was there at their first show lol) with 12 people in it to [Madison Square Garden] with like 20k people. They are such good dudes, they deserve it for sure.

Again, I’m not trying to claim I knew Daniel well. But from what I did know, this is very much him: happy in general, but especially to see others happy and successful.

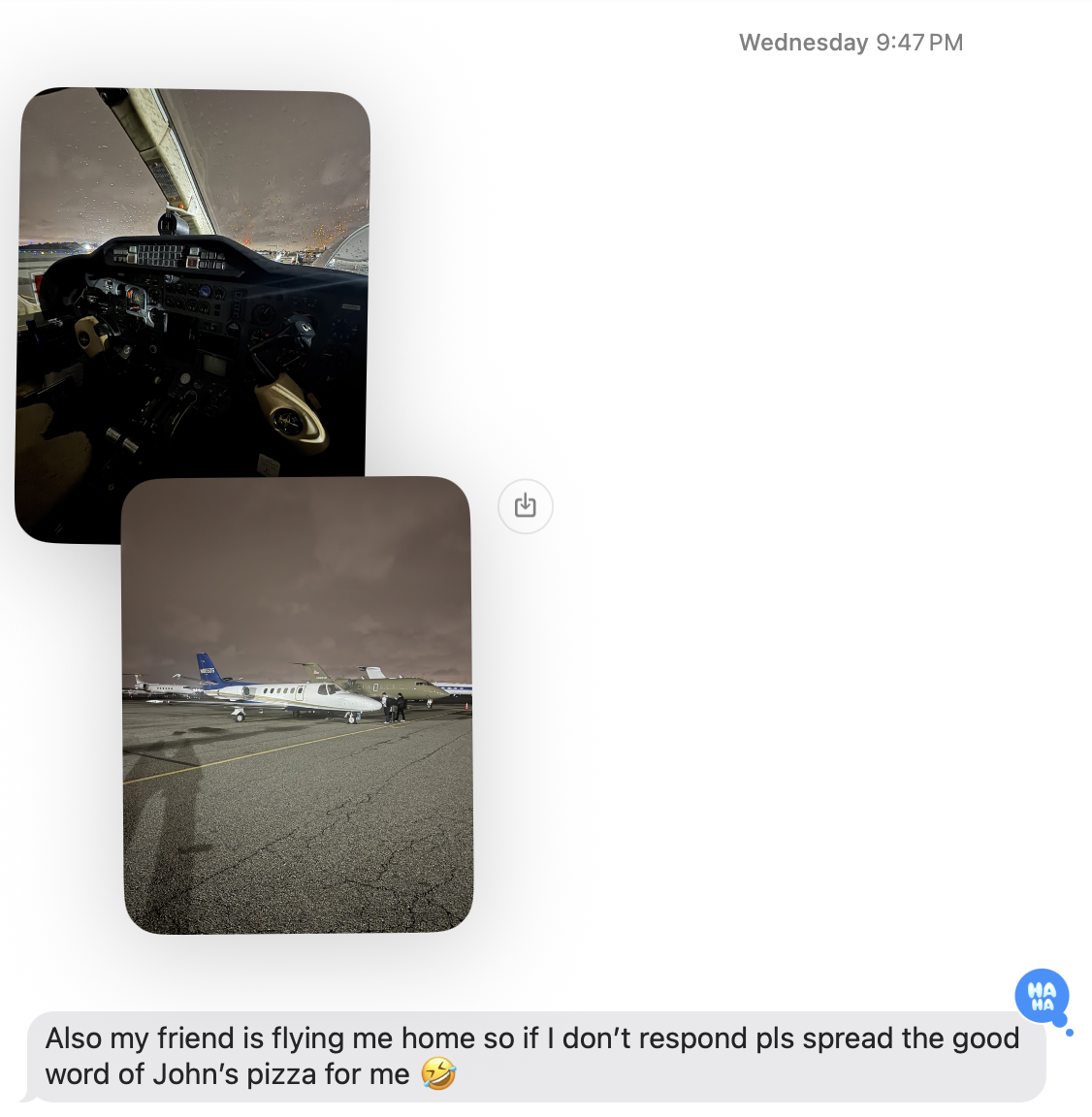

Daniel sent that, Wednesday night, after sending pictures of him eating at my favorite pizzeria in the world. He went on my suggestion, and was kind enough to send me these three photos, which made me deeply jealous 🤤:

Looking back on these photos now, I can’t help but wonder if they’re photos of his last meal. 😢

Three hours later, he had this to say:

His last message to me just hits different now:

💔

On Friday night — two days after we last exchanged messages, and the day after Daniel’s tragic accident — I was looking at Facebook, and saw his image in a news story that somehow landed on my timeline. Then I read the headline, and the two words jumped out at me: Plane Crash. I had no idea.

I was — and remain — shocked.

The last time I wrote a post like this, it was also about an internet friend. I wrote then:

Jason was like nobody I’ve ever met. Which is an odd thing to say, since we had never actually met in person. Nevertheless, I immediately started to cry upon hearing the news; an odd thing to do for someone who, on paper, was just a voice in my head.

Though Daniel and I did meet the one time, the feelings are all the same. Though this time, they’re complicated by me chatting with Daniel hours before he passed away.

From what I’ve read, the crash was the confluence of a ton of weird events: truly terrible weather, equipment malfunctions, etc. The pilot, Dave, was by all accounts an exceptionally skilled pilot. Sometimes, though, everything conspires against you.

Life is a magical, amazing thing, that can be snatched from you in an instant. You never know when — nor how — your ticket will be punched.

Do yourself a favor and hug your special people close today. From everything I know, Daniel would want you to.

Rest in peace, friend. 💙

School is wrapping up this week here in Richmond, and as such, I’m deeply into Maycember. Thus, it completely slipped my mind to post about my most recent guest appearance.

Last week, I joined my friend and co-conspirator, Ben Rice McCarthy, as well as my internet pal Brian Hamilton, on their The Last of Us recap podcast, The Cast of Us.

On the episode, we covered The Price. Both Brian and Ben have played the video games, but I have not, so it was an especially fascinating conversation for me, covering some of the differences between the games and the show.

The Last of Us is a show that I deeply enjoy, despite it not being my usual kind of television programming. I tend toward easy watches, which mostly just wash over me. The Last of Us is not that kind of show, and though it’s challenging, it’s also deeply rewarding.

I really enjoyed the conversation I had with Ben and Brian; if you’re watching The Last of Us, I think you will too.

And as a bonus, the next episode features my co-host, John Siracusa!

Callsheet 2025.4 is currently rolling out in the App Store. If you don’t have it yet, you can open the app in the Store and that should allow you to Update manually.

So what’s new?

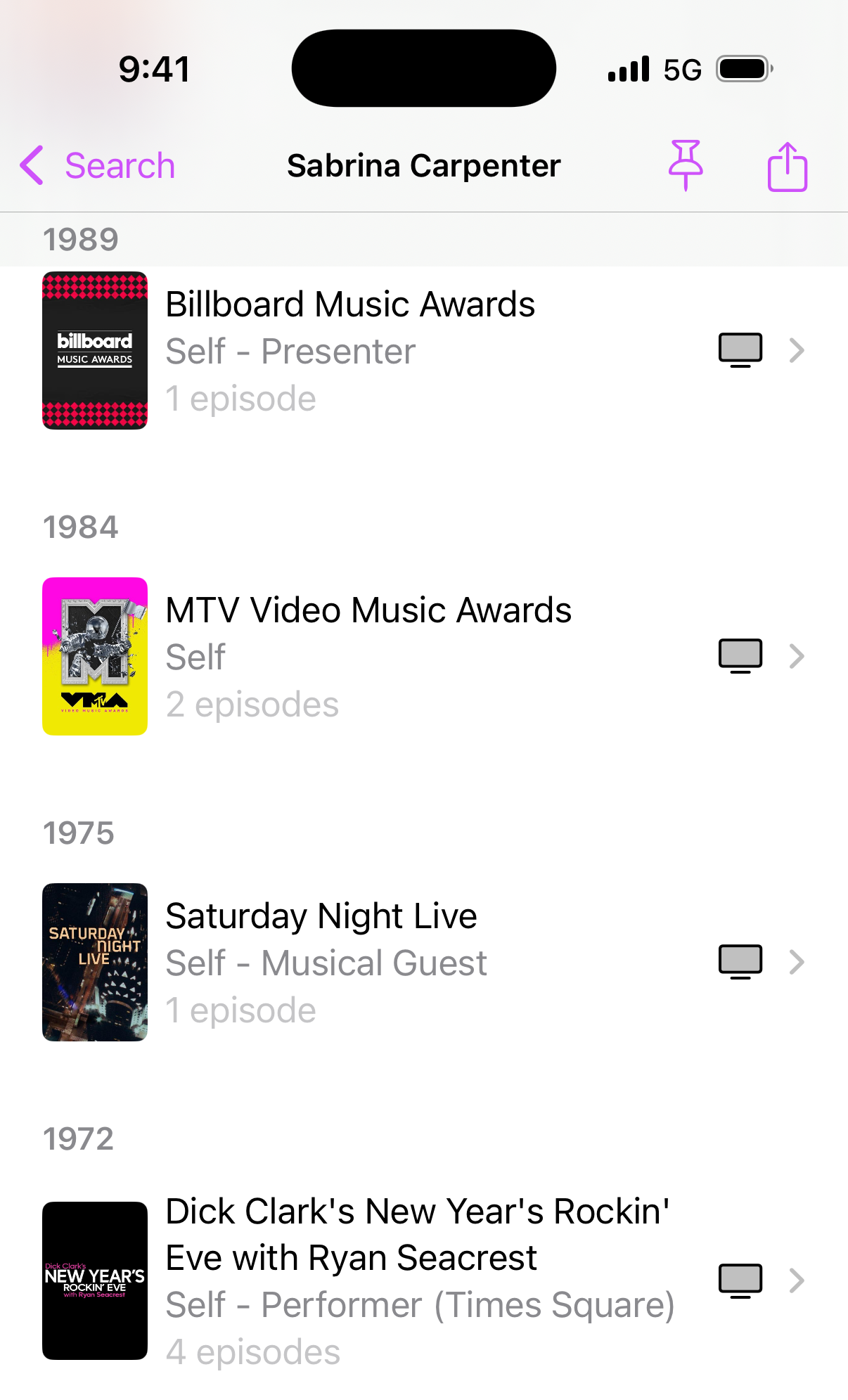

TV Guest Appearance Dates

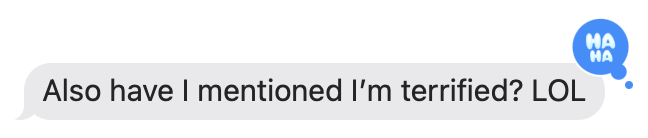

Users, understandably, have quibbles and feature requests about Callsheet. However, one complaint far-and-away stood out as the most common. It went something like this:

Why is it when I look at Sabrina Carpenter, her appearance on Saturday Night Live is listed… before she was born… back when the show started in 1975?

After a long time, in version 2025.2, I added a hack to help alleviate this problem: the Long-Running Series collapsible section on a person’s filmography. I was never happy with this, but at least it prevented pre-birth appearances.

To properly slot the appearance into the correct year “bucket” would require a zillion calls to The Movie Database’s servers, and thus make Callsheet itself feel quite a bit slower. That juice wasn’t worth the squeeze.

Now, thankfully, after much pleading, the fine folks at TMDB have finally delivered. Thanks to a change on the API side, the information I need is now available, and I can slot a person’s first appearance in a show into the correct year.
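To make the year "bucket" idea concrete, here is a minimal sketch in Python. It is not Callsheet's actual code, and it does not mirror TMDB's real response format: the `TVCredit` shape and the `first_appearance_date` field name are assumptions made for illustration, and the appearance date in the usage note is a placeholder rather than a real air date.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class TVCredit:
    """One TV credit on a person's filmography (hypothetical shape)."""
    show: str
    series_first_air_date: date
    # Hypothetical field: when this person first appeared in the show.
    first_appearance_date: Optional[date] = None


def bucket_year(credit: TVCredit) -> int:
    """Prefer the person's first appearance; fall back to the series premiere."""
    chosen = credit.first_appearance_date or credit.series_first_air_date
    return chosen.year


def group_by_year(credits: list[TVCredit]) -> dict[int, list[str]]:
    """Group show names into year buckets, newest year first."""
    buckets: dict[int, list[str]] = defaultdict(list)
    for credit in credits:
        buckets[bucket_year(credit)].append(credit.show)
    return dict(sorted(buckets.items(), reverse=True))
```

With only the series premiere available, a Saturday Night Live credit lands in 1975. Once a first-appearance date is present (say, a placeholder `date(2024, 1, 1)`), the same credit moves to 2024, which is the behavior described above.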

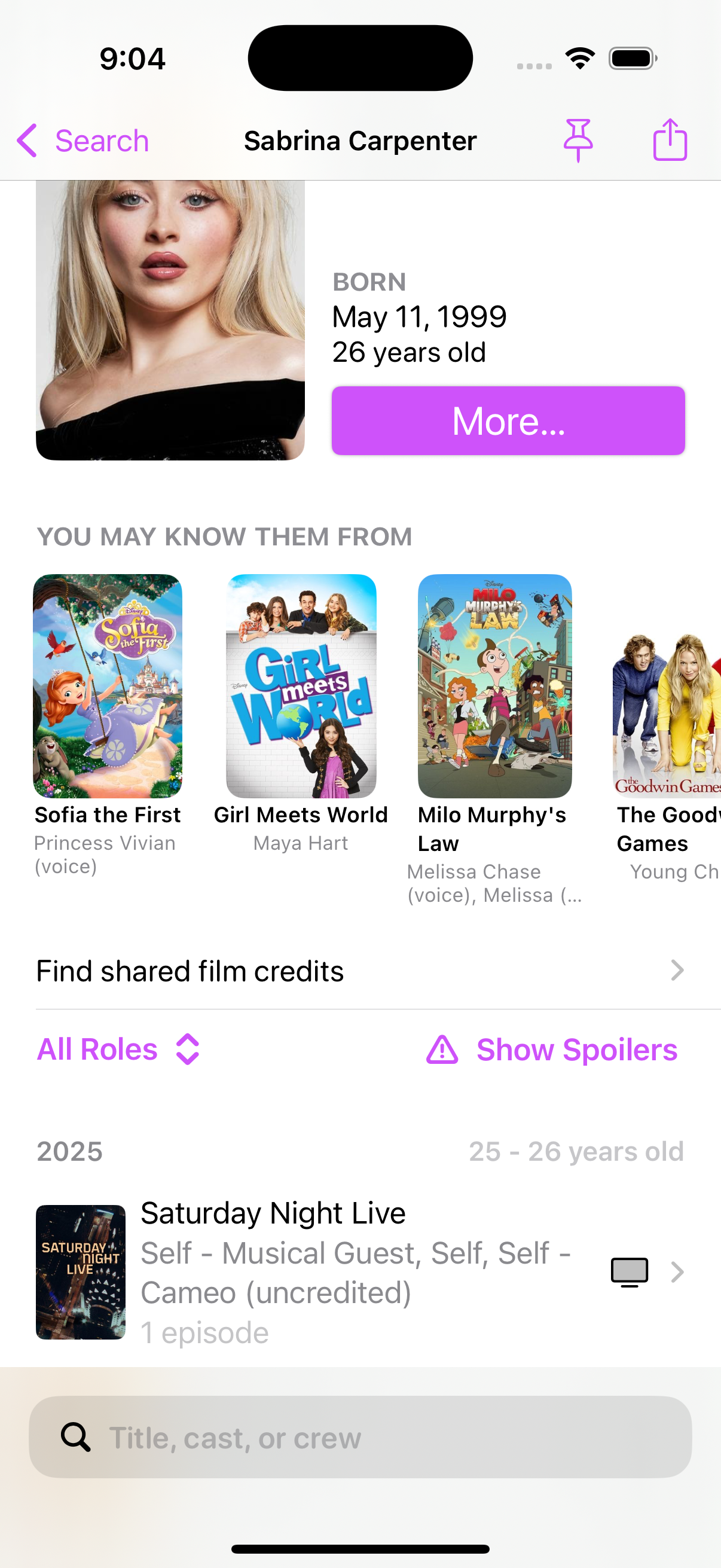

More Ages in Cast/Crew Lists

One of my favorite bug reports to get is when someone writes in to say something like:

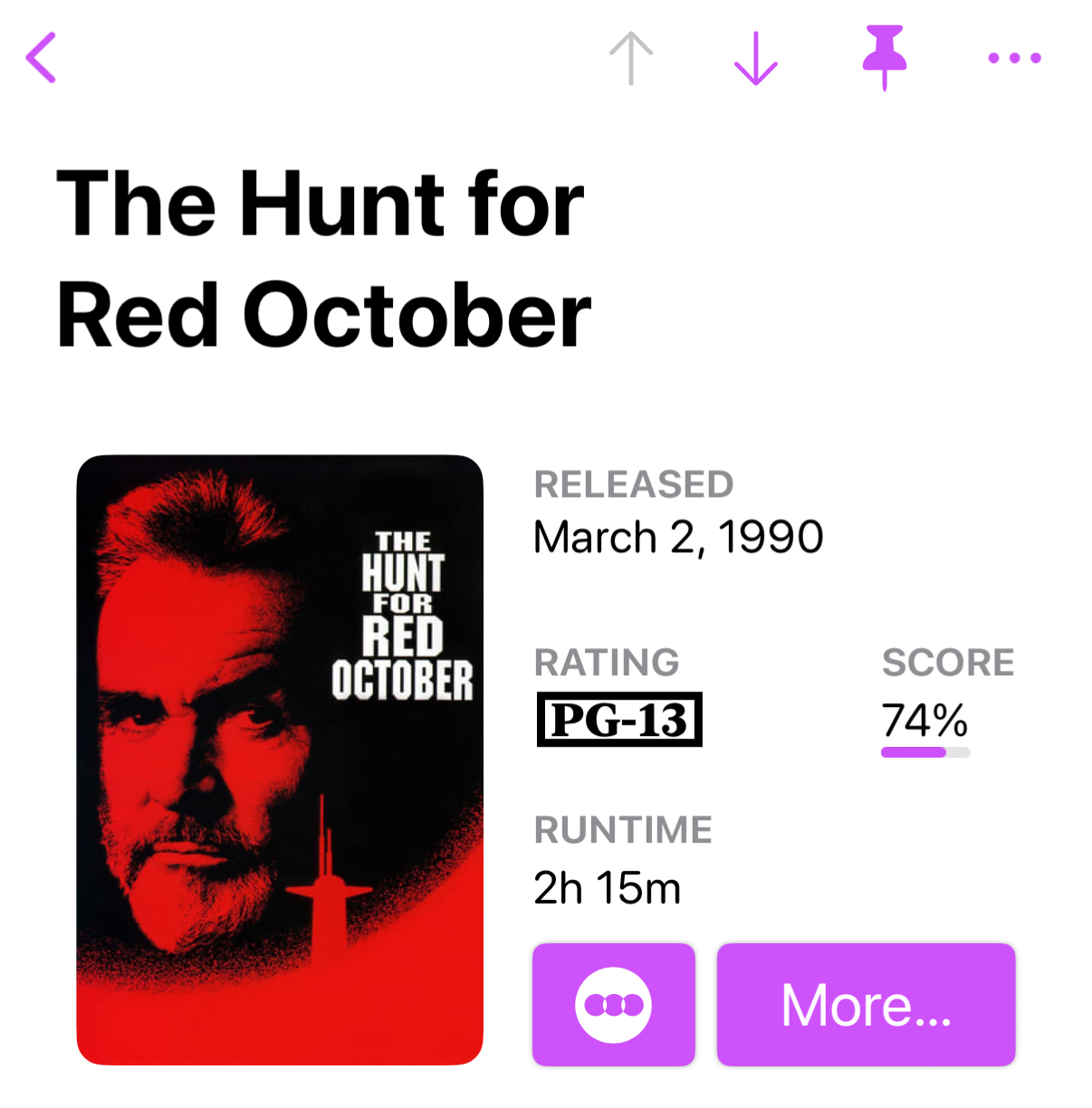

Alec Baldwin is listed as 31 years old in The Hunt for Red October, but he’s quite a bit older now.

When I respond to say “that’s how old he was when the movie was released”, the inevitable “🤯” I get in return is always delightful.
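The arithmetic behind that answer is simple enough to sketch. This is an illustration rather than Callsheet's implementation; the dates in the usage note (a birth date of 3 April 1958 and a release date of 2 March 1990) are my assumptions about the example, not something stated in this post.

```python
from datetime import date


def age_on(birth: date, on: date) -> int:
    """Whole years between a birth date and some other date."""
    years = on.year - birth.year
    # Subtract one if the birthday hasn't happened yet in the target year.
    if (on.month, on.day) < (birth.month, birth.day):
        years -= 1
    return years
```

Under those assumed dates, `age_on(date(1958, 4, 3), date(1990, 3, 2))` gives 31: the release falls about a month before the birthday that year.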

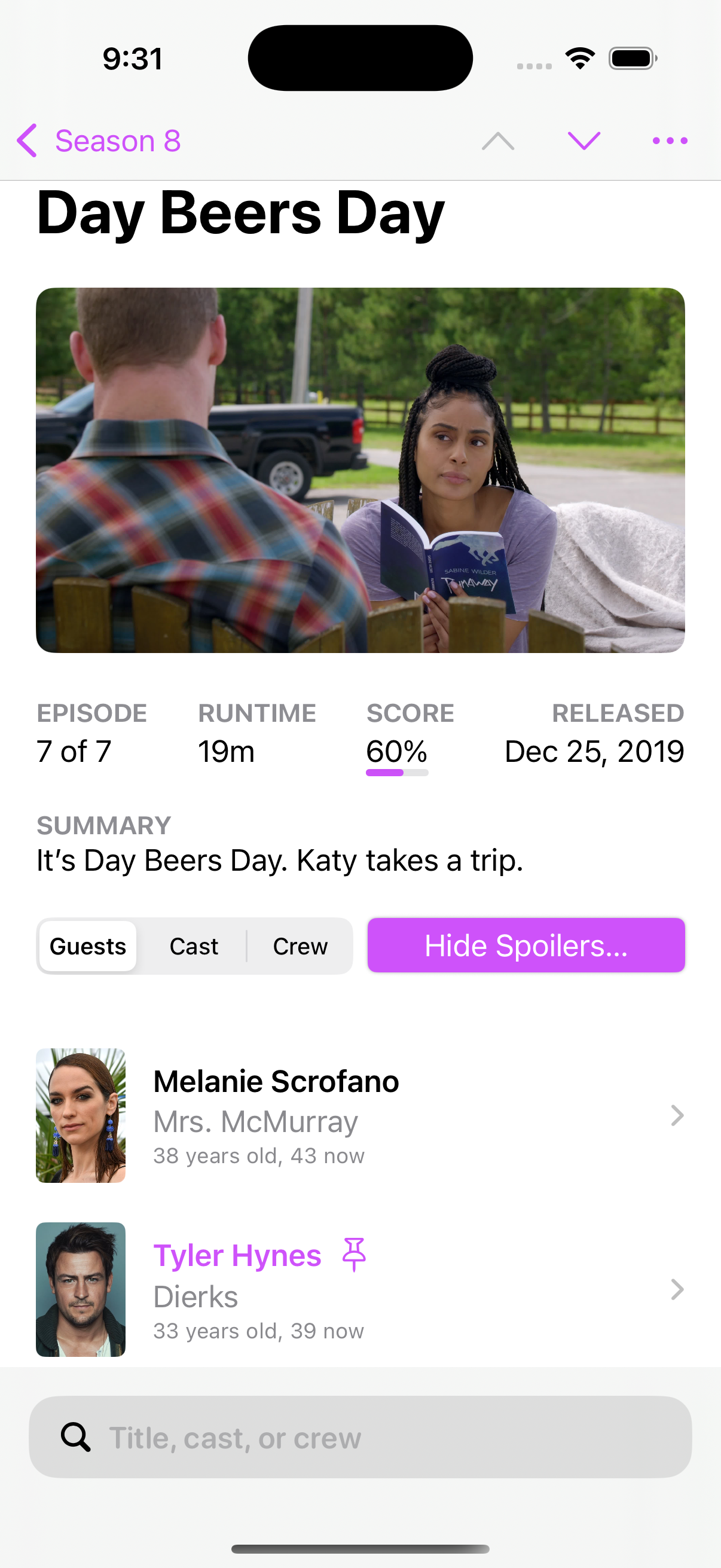

However, it should be clearer, and now it is:

Highlights for Pinned People

As you’re browsing around Callsheet, it should be smart enough to call attention to people that you’ve previously pinned. Now, Callsheet will do exactly that:

Beginnings (?) of Letterboxd Integration

I’m making 0️⃣ promises, but on the long-term roadmap is to integrate with Letterboxd. As a stop-gap, I’ve added Letterboxd as an optional Quick Link. Thus, you can also make this your Quick Access Link, if you so choose:

Please note that this link will only show for movies, and not TV shows nor people.

Select Available Links

With the addition of Letterboxd as a Quick Link, the list of possible links is getting quite long. In the in-app Settings, you can now toggle links on or off. Note that the Quick Access Link cannot be toggled off, until you switch the Quick Access Link to a different link.

(There are a couple of minor visual glitches in the selection screen that will be fixed in the next version.)

Quick Hits & Bug Fixes

- Credit counts are now shown at the very bottom of a person’s filmography

- On macOS, tool tips have been added for Quick Links and the toolbar

- You can now paste TMDB or IMDB URLs into the Search box; once you hit search, Callsheet will show the correct item

- Fixed behavior for TV shows with non-contiguous season numbers

- Fixed share links from context menus not using universal links

- People’s “Known For” should now more heavily weight shows and movies you’ve pinned

- Actor heights are now back, where possible

- Searches will trim whitespace by default

- Fix a weird visual glitch where the fourth (and only fourth 🤨) person in a cast/crew list would have no image

- Fix visual glitch with the “Released” for TV episodes

- Now requires macOS 15.0

- German localization fixes
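For the curious, the pasted-URL and whitespace-trimming items above could be sketched roughly like this. It is a Python illustration, not the app's code: the function name is hypothetical, and the URL shapes (`/movie/<id>`, `/tv/<id>`, `/person/<id>` on themoviedb.org, and `/title/tt…`, `/name/nm…` on imdb.com) are the public patterns as I understand them. The IDs in the usage note are made up.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Path patterns assumed from the sites' public URL shapes.
TMDB_PATH = re.compile(r"^/(movie|tv|person)/(\d+)")
IMDB_PATH = re.compile(r"^/(title|name)/((?:tt|nm)\d+)")


def parse_pasted_link(text: str) -> Optional[tuple[str, str, str]]:
    """Return (site, kind, id) for a recognized URL, or None for a plain search."""
    text = text.strip()  # searches trim whitespace by default
    parsed = urlparse(text)
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]

    if host == "themoviedb.org":
        match = TMDB_PATH.match(parsed.path)
        if match:
            return ("tmdb", match.group(1), match.group(2))
    elif host == "imdb.com":
        match = IMDB_PATH.match(parsed.path)
        if match:
            return ("imdb", match.group(1), match.group(2))
    return None
```

Pasting `https://www.themoviedb.org/movie/12345-some-title` would yield `("tmdb", "movie", "12345")`, while ordinary text like `hunt for red october` returns `None` and falls through to a normal search.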

I tend to feel… strongly. That applies to most aspects in life, including when I find a company whose product(s) I really enjoy.

Those products tend to share common traits; they are often simple on the surface, but have surprising depth to them. They can mold themselves to fit my needs, but once that mold is set, they get out of my way. But more than anything else, they tend to be reliable. I fell in love with Apple because, back in the mid aughts, their products really did just work.

I think my appreciation for a product crosses the rubicon into love when it regularly and repeatedly demonstrates one trait: respect for the user.

Even an unreliable product can keep my trust, enthusiasm, and love, if I can tell that those who make it have a deep respect for me.

Many years ago, Eero sponsored my podcast. They were kind enough to send a three-pack of base stations, and I have not looked back. Eero was simple to use, but powerful enough for the needs of a superdork like myself. It largely remains so to this day.

However, a few years back, Eero fell victim to every corporation’s favorite thing: recurring revenue. Eero started quietly pushing Eero Plus, a subscription service that I was largely uninterested in.

Over the years, this has gotten more and more aggressive, and, annoyingly, more and more has been gated behind Eero Plus. I love that I can see totals for downloaded and uploaded data in the app, but every time I want to dig deeper, I see this upsell, and I grumble.

This is not respect for the user. This is enshittification. It’s a footgun.

As I write these words, I still use Eero in my house. I still recommend it to most people. But instead of an unequivocal, no-caveats “get this”, my advice is now “get this if you’re not a power user”.

I plan to replace my Eero setup with Ubiquiti equipment later this year.

Sonos is the classic example of “once you experience it, there’s no going back”. I was able to buy some Sonos equipment at a steep discount, and I was woefully unsure if I had just wasted a still-considerable amount of my money.

Within the first 48 hours, I was a Sonos superfan, and started evangelizing their products to anyone who would listen. All it took was walking a portable speaker from one end of the top story of my house, past the music playing in my office, past the music playing in the living room, past the music playing on the porch, to the backyard. Somehow — by ✨ magic ✨ — the music was perfectly synchronized the whole way. The speaker in my hands was perfectly synchronized with the office, the living room, and the porch. Incredible.

While that still remains true today, the Sonos app has been an utter disaster. Around this time last year, Sonos put their own needs over their users’, and foisted a completely-rewritten app upon their entire userbase. This app was (and remains!) deeply maligned. At this point, a year later, it’s usable, and I generally don’t have problems with it.

What was once an unequivocal recommendation to friends and family — “You should get a Sonos system; just tell me how much you want to spend” — has now become “well, I still recommend a Sonos system, but there’s some things you should know…”.

It didn’t have to be this way. This is not respect for the user. While I wouldn’t go so far as to say this is enshittification, I will say it’s 100% a footgun.

Indiana Lang said it extremely well:

We need systems that prioritize stability over flashy new interfaces, and functionality over form.

This week, the chickens that have been wandering around have come home to roost. This time it’s Synology, of whom I’m such a fan that every time I bring them up on ATP, Marco hits a vibraslap for both humor and emphasis.

Synology were kind enough to send me a filled, 8-bay network attached storage device back in 2013. Within a couple months I was in love. Having infinite storage available really changes how you think about your digital life. While a Synology is not for most people, it’s 10000% for people like me. I would not and did not stop talking about them. I’ve personally sold countless Synology units by my enthusiasm alone.

This week, things finally changed, officially. Synology will be restricting features for those who do not use Synology-branded drives. Drives that are bog-standard enterprise hard drives, possibly with some custom firmware in them.

Why? For more revenue. Footgun.

I’m not sure if I was more of a fan of Synology or Sonos, but suffice it to say, I was a superfan of both. I just replaced my original Synology last year, and I’m sad to say that the one I just got is likely to be my last. While I love having a place to store files, run Docker containers, and handle Dropbox, I don’t want to be on this ride. I don’t want to reward their greed. I’m out.

Whenever this Synology dies, I’ll be replacing it with a UNAS Pro.

Synology have turned off so many of their most ardent evangelists. Just so they can sell some overpriced hard drives.

It doesn’t have to be this way, and we saw that earlier this year from Apple, of all people. The kings of margins; the kings of SeRvIcEs ReVeNuE.

Up until the recent release of the M4-powered MacBook Air, a simple question always had a difficult answer: “What computer should I buy?”.

The answer depended on what you were doing with it, how much you wanted to spend, how long you wanted to keep it, and more. Maybe it’s a MacBook Air. Maybe a MacBook Pro. And even if it is an Air, you should definitely upgrade the RAM, and possibly the SSD.

It was complicated.

Now, as of the $999 M4 MacBook Air? “Buy an M4 MacBook Air. Upgrade the RAM or SSD, in that order, if you have the means. But it’s okay if you don’t.”

Done.

The opposite of a footgun. A problem solved. Solved by respect for the customer.

Capitalism sucks, but it’s the system we have, and I don’t know a better one. But as a small-time capitalist myself, I keep wondering why Callsheet has been so well-received in the market. A lot of it is luck, but I think it boils down to two simple facts:

- IMDB does not respect its users, which it demonstrates in new and fantastic ways every time you use it

- Callsheet does.

I endeavor to keep it that way, for as long as y’all will let me.

I don’t recall when, exactly, I started using Dropbox. However, I apparently earned a bonus 250 MB of space by completing the “Getting Started” flow back in September of 2009. So, it’s been a minute.

At first, Dropbox was a revelation. It was the first time something synchronized with the cloud, and did so reliably. Their client apps were svelte, optimized, and worked a treat.

Over the years — mostly but not entirely because of their own choices — their client apps have become gross, bloated, overcomplicated messes.

I still rely on Dropbox to collaborate with my coworkers. However, I haven’t run one of their client apps on my computers in around five years. How?

My Synology takes care of it for me. If you happen to have a Synology NAS, you, too, can live in this eden.

The path forward is a combination of Synology Drive and Synology Cloud Sync. In short, Drive acts as a faux-Dropbox, and allows you to sync files between your devices via your own Synology. Cloud Sync then synchronizes between your Dropbox and your Drive.

- Install Synology Drive Server on your Synology

- Configure it… however it needs to be configured. Honestly, I haven’t done this for a decade, so, uh, Godspeed.

- Install a Synology Drive client on your computer and verify that files are being synchronized between your computer and Synology.

- Install Synology Cloud Sync on your Synology

- Click the ➕ button to add a new cloud provider

- Select Dropbox

- Go through the authentication flow. While you do this, the key is to set your Dropbox sync folder to be within your Drive. This way, the place that Dropbox is synced to is synced to your computer via Drive

- Delete the Dropbox client from your computer and rejoice

So, for me, my Synology’s filesystem looks like this:

~casey

+-- ...

+-- Drive

| +-- ...

| +-- Dropbox

| | `-- (My entire Dropbox is here)

| `-- ...

`-- ...

On my Mac, it looks basically the same.

So, if I were to create a new file on my Mac, and save it as

~/Drive/Dropbox/some-text-file.txt

The following will happen:

- The Synology Drive client on my Mac will see the new file

- That file will be uploaded to the Synology

- Cloud Sync (running on the Synology) will see the new file within the synchronized Dropbox directory

- Cloud Sync will upload that file to Dropbox

Thus, some-text-file.txt ends up in my Dropbox, and all I had to do was save the file on my local SSD and trust my synchronization scaffolding to take care of it.
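The chain of hops above can be traced with a small Python sketch. It only models where a file ends up; it does not talk to Synology Drive, Cloud Sync, or Dropbox. The root paths are hypothetical stand-ins for my layout (yours will differ), and it assumes Cloud Sync maps the `Dropbox` folder to the root of the Dropbox account.

```python
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical roots; adjust to match your own Mac and Synology.
MAC_DRIVE_ROOT = PurePosixPath("/Users/casey/Drive")
NAS_DRIVE_ROOT = PurePosixPath("/volume1/homes/casey/Drive")
DROPBOX_SUBFOLDER = "Dropbox"  # the Cloud Sync target inside Drive


def trace_sync(mac_path: str) -> dict[str, Optional[str]]:
    """Show where a file saved under Drive on the Mac ends up at each hop.

    Raises ValueError if the file is not inside the Drive folder at all.
    """
    relative = PurePosixPath(mac_path).relative_to(MAC_DRIVE_ROOT)
    # Hop 1: the Drive client mirrors the file to the Synology.
    nas_path = NAS_DRIVE_ROOT / relative
    # Hop 2: Cloud Sync only picks up files inside the Dropbox subfolder.
    in_dropbox = relative.parts[:1] == (DROPBOX_SUBFOLDER,)
    dropbox_path = "/" + "/".join(relative.parts[1:]) if in_dropbox else None
    return {"synology": str(nas_path), "dropbox": dropbox_path}
```

Calling `trace_sync("/Users/casey/Drive/Dropbox/some-text-file.txt")` reports a copy on the Synology and a Dropbox path of `/some-text-file.txt`. A file saved elsewhere in Drive still reaches the Synology, but its `dropbox` entry is `None`.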

I will say that the Synology Drive client for macOS is… not great. It’s clearly a cross-platform app, though I believe it’s using Java or some other sort of technology, rather than Electron. It’s ugly, but it works, and unlike the Dropbox client apps, it stays out of the way.

I can’t recommend this setup enough, and if you have the means, I highly suggest you pick up a Synology and give it a chance.

I was pleasantly surprised — moments before sitting down to record Clockwise — that Devon Dundee over at MacStories had done a write-up of the aforementioned Callsheet 2025.2. Specifically, Devon spent a lot of time with the multiple lists of pins headline feature.

Outside of the publicity, this write-up was particularly gratifying for me, as Devon absolutely took notice of some of the power-user affordances I included. I thought long and hard about how to appease both novice and power users alike; it was so great to hear Devon pick up on all of these choices.

This includes both the progressive disclosure of multiple lists[1], as well as the surprising depth of the pin toolbar icon[2].

One of the ways I stay positive about my work is by working hard. I try very hard to produce work I’m proud of, and that will delight my users. To be able to read about how Devon was delighted by a very large effort that took a lot of thought was such a gift. 🥹

If you haven’t given Callsheet a try recently, you really should. It’s really coming into its own. 😊

Namely, the switch from

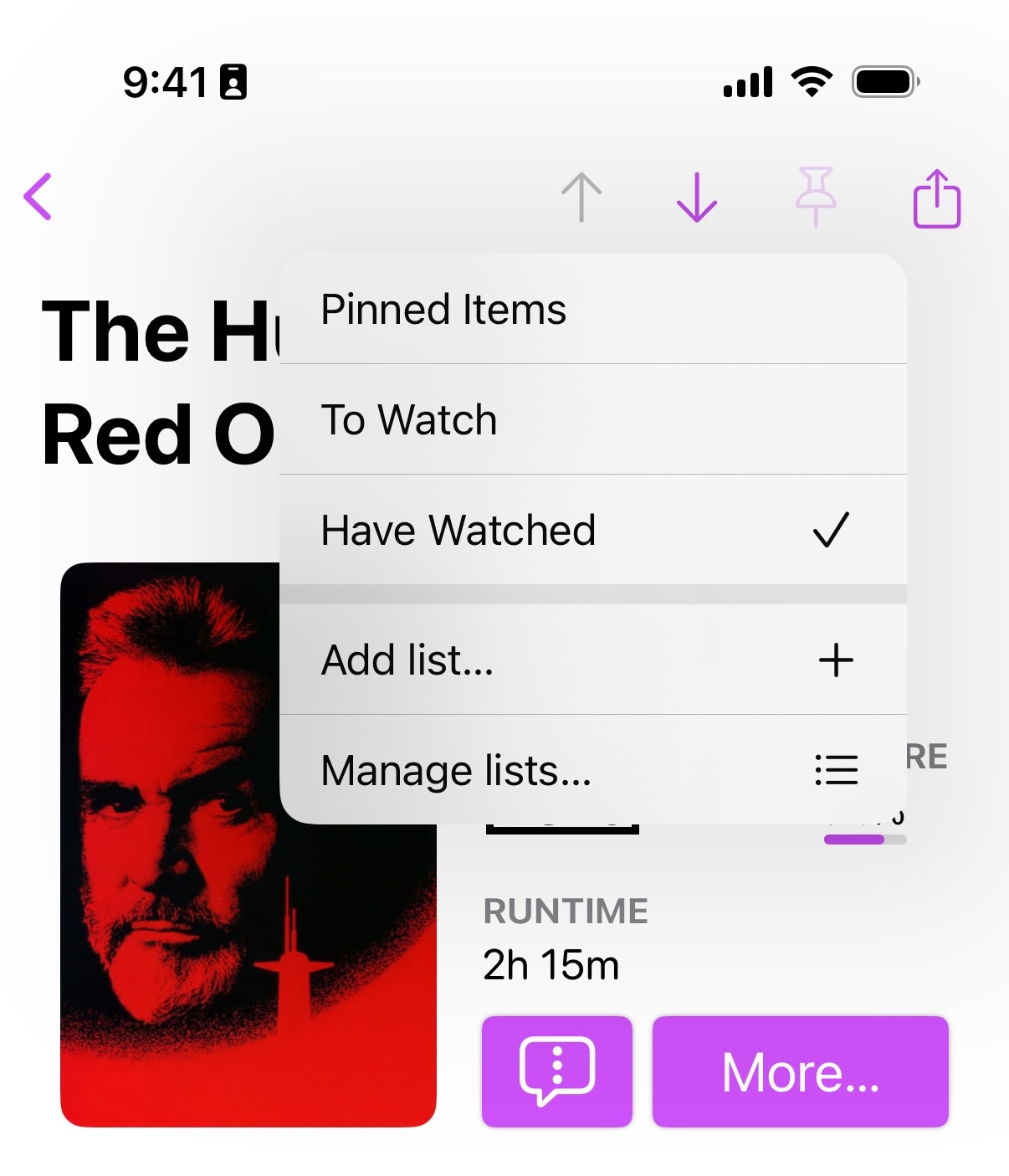

Add list…toSwitch liston the Discover screen. ↩If you tap on the pin, the current item will be added or removed from the active list, as appropriate. If you tap-and-hold, a menu will be presented, which will show all lists, and whether or not the current item is a member of any of them. You can quickly add or remove from this menu. Finally, the pin will be pinstriped if the current item is pinned in a non-active list. ↩

Yesterday I joined Dan, Micah, and Simone de Rochefort on Clockwise.

On this episode, we discussed what we carry in our wallets, whether or not buying a phone made by a heavy equipment manufacturer is a wise choice, how we feel about the new Pebble, and how my co-hosts are all wrong about cellular MacBooks.

Upgrade is my favorite tech podcast. I enjoy it thoroughly, every week.

This week, I was honored and lucky to fill in for Myke and join Jason on the show. It’s a lot of pressure to join one of your favorite shows; not only did I not want to let Jason and Myke down, but I didn’t want to ruin a great show!

Thankfully, I’m really happy with how the episode turned out. Jason and I spoke about all sorts of things. Naturally, a lot of Apple news, but I was happy to sneak in some of my latest obsession — home automation — as well as an update on Callsheet.

Jason and Myke typically film the show and upload to YouTube; Jason and I did the same. You can find the episode there:

If you’re not a regular Upgrade listener, shame on you, but there’s no better time to start than now.

I’d also like to take a moment to wish a hearty CONGRATULATIONS to Myke and Adina Hurley! Myke needed me to sub for him on Upgrade for a very good reason:

Welcome to the world, Sophia. Your parents worked hard to get you here, and I’m very excited to meet you.

Since shortly after Callsheet was released, my #1 request from my users has been the same thing: pins are great, but can we have multiple lists of pins?

Today is the day.

Over the last few days, Callsheet 2025.2[1] has been quietly rolling out to users. While there is a lot in this release, the headline feature is the ability to make multiple lists of pins. If you have Callsheet installed, and it hasn’t updated, you can open it in the App Store and force your device to update it.

Multiple Pins

As noted above, a clear improvement to Callsheet would be for it to support multiple lists of pins. Many users asked for some variation of three lists:

- Watching

- Have Watched (or, perhaps, Favorites)

- To Watch

The headline feature of 2025.2 is exactly this: Callsheet now allows for more than one list of pinned items. Additionally, the Pinned Items list can now be renamed, though it cannot be deleted.

As I was designing this feature, I wanted it to be completely ignorable if multiple lists just aren’t a user’s cup of tea. Furthermore, in order to keep the user interface straightforward, I wanted to avoid asking users “which list are you talking about?” every time a pin is added or removed. However, I wanted to make it possible for power users to quickly add or remove an item from any of their lists.

Callsheet now has a concept of an Active List, which is to say, the default list that pins will be shown, added to, or removed from. If you open an item in the app, and you tap the outline of the pin in the toolbar, the Active List is the list that will have a new pin in it. (This is the same way things have always worked in Callsheet; except that there could only ever be one list.)

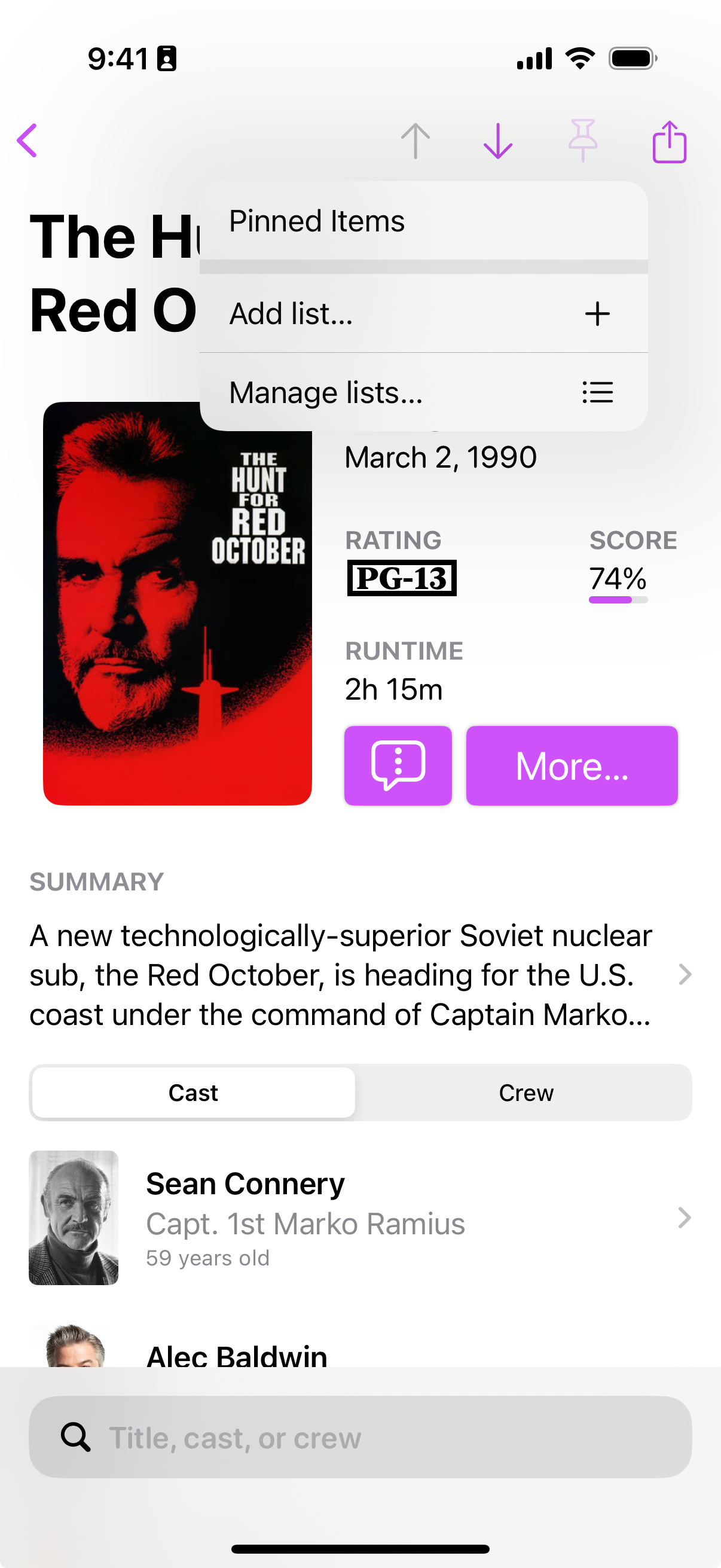

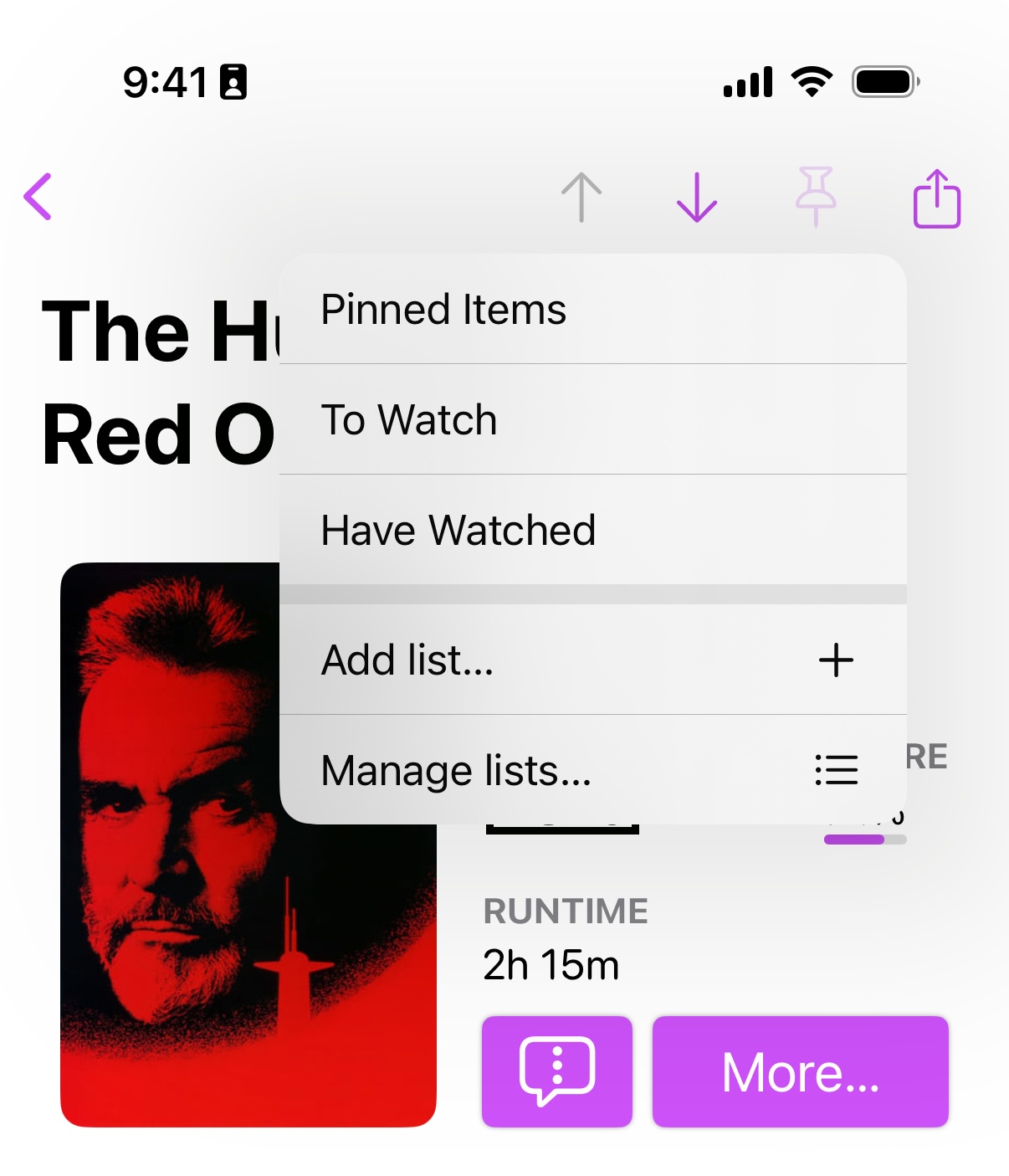

However, if you tap and hold on the pin icon in the toolbar, you’ll be presented with a new pop-up menu:

Right now, this menu isn’t very interesting, as it only has the default Pinned Items list in it. Since Pinned Items is the active (and, as it turns out, only) list, if I were to tap on that menu item, it would do the same thing as tapping the pin icon: Hunt would be added to the active list, which is Pinned Items.

Once you add a few lists, that menu gets more interesting:

If I were to select Have Watched, then The Hunt for Red October would be added to that list. This would be shown as a ✔️ on that menu item.

Now that Hunt is in the Have Watched list, to indicate that this item is pinned on an inactive list, the pin icon is striped:

To switch the Active List, return to the Discover screen (the first screen you see when the app opens). On the right-hand side, you’ll see a link/button that reads either Add list… (if you only have one list) or Switch list. Tapping Switch list will change what the Active List is. Additionally, you can see the items in the Active List by tapping the list name, just like Callsheet has always worked.
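The pin behavior described in this section can be modeled in a few lines. This is a Python sketch of the idea only, not how Callsheet is built. The `PinStore` and `PinIcon` names are hypothetical, and two details are assumptions on my part: that a pin in the Active List is drawn "filled", and that it takes precedence over the striped state when an item is on several lists.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class PinIcon(Enum):
    OUTLINE = "outline"  # not pinned anywhere
    FILLED = "filled"    # pinned in the Active List (assumed appearance)
    STRIPED = "striped"  # pinned, but only in a non-active list


@dataclass
class PinStore:
    # The default list always exists; it can be renamed but not deleted.
    lists: dict[str, set[str]] = field(
        default_factory=lambda: {"Pinned Items": set()}
    )
    active: str = "Pinned Items"

    def toggle(self, item: str, list_name: Optional[str] = None) -> None:
        """Tap = toggle in the Active List; tap-and-hold = pick a list by name."""
        target = self.lists[list_name or self.active]
        if item in target:
            target.remove(item)
        else:
            target.add(item)

    def icon_for(self, item: str) -> PinIcon:
        if item in self.lists[self.active]:
            return PinIcon.FILLED
        if any(item in members for members in self.lists.values()):
            return PinIcon.STRIPED
        return PinIcon.OUTLINE
```

The point of the design is that a novice never has to answer "which list?": `toggle(item)` with no list name behaves exactly as pinning always has, while the optional `list_name` covers the power-user menu.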

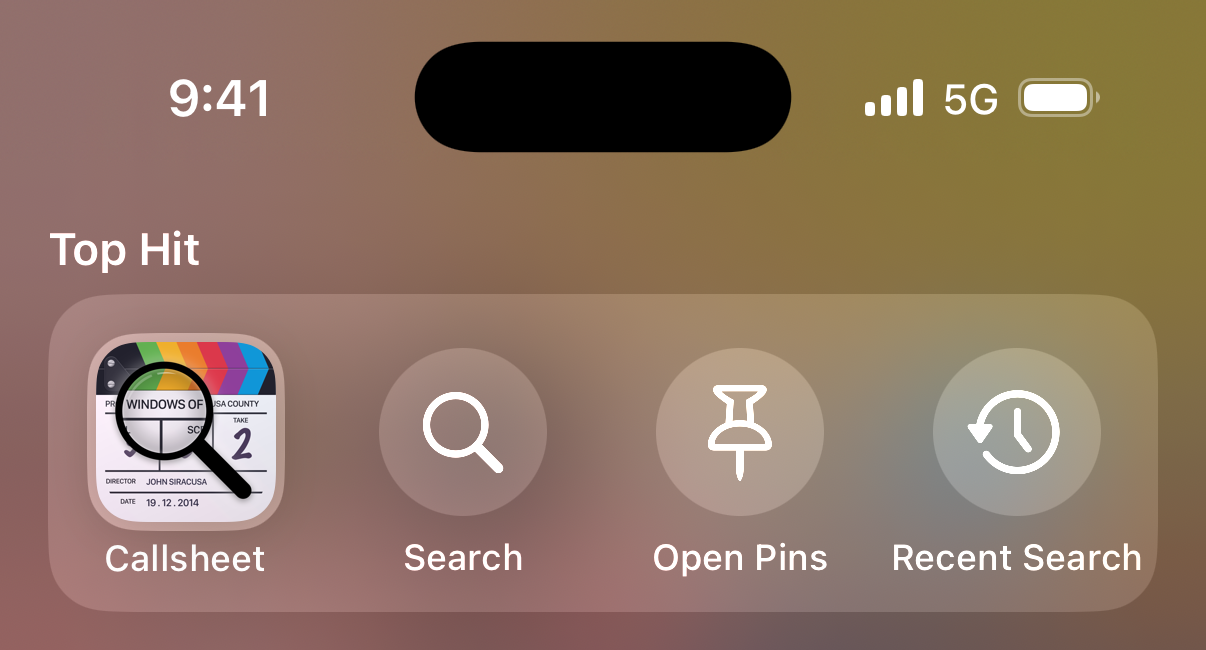

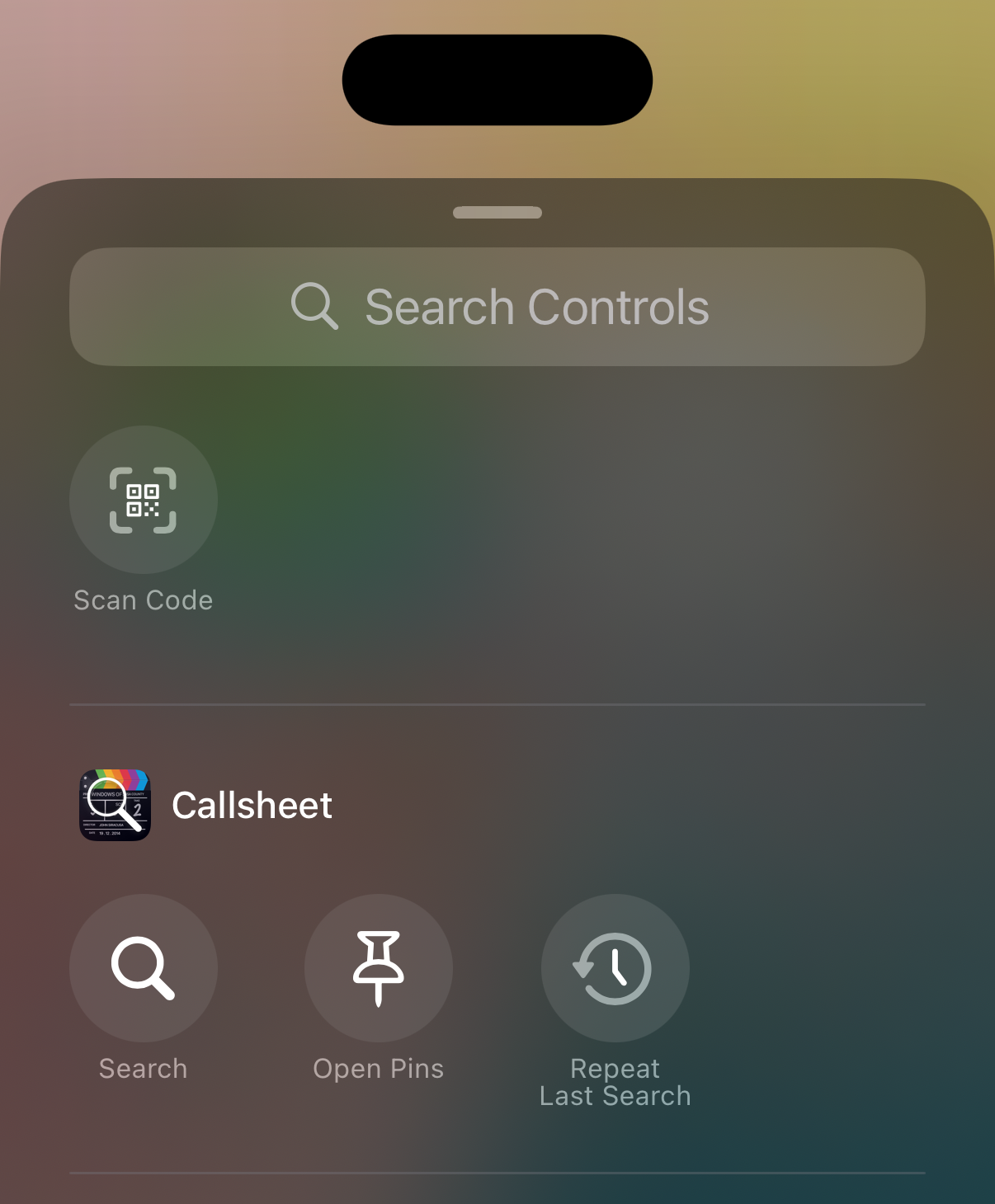

Spotlight, Control Center, and Shortcuts

I’ve also begun adding App Intents to the app, which in non-nerd means “you start to see Callsheet showing up in more places within your phone now”. This includes Spotlight:

As well as Shortcuts:

And Control Center:

Better Treatment for Long-Running Series

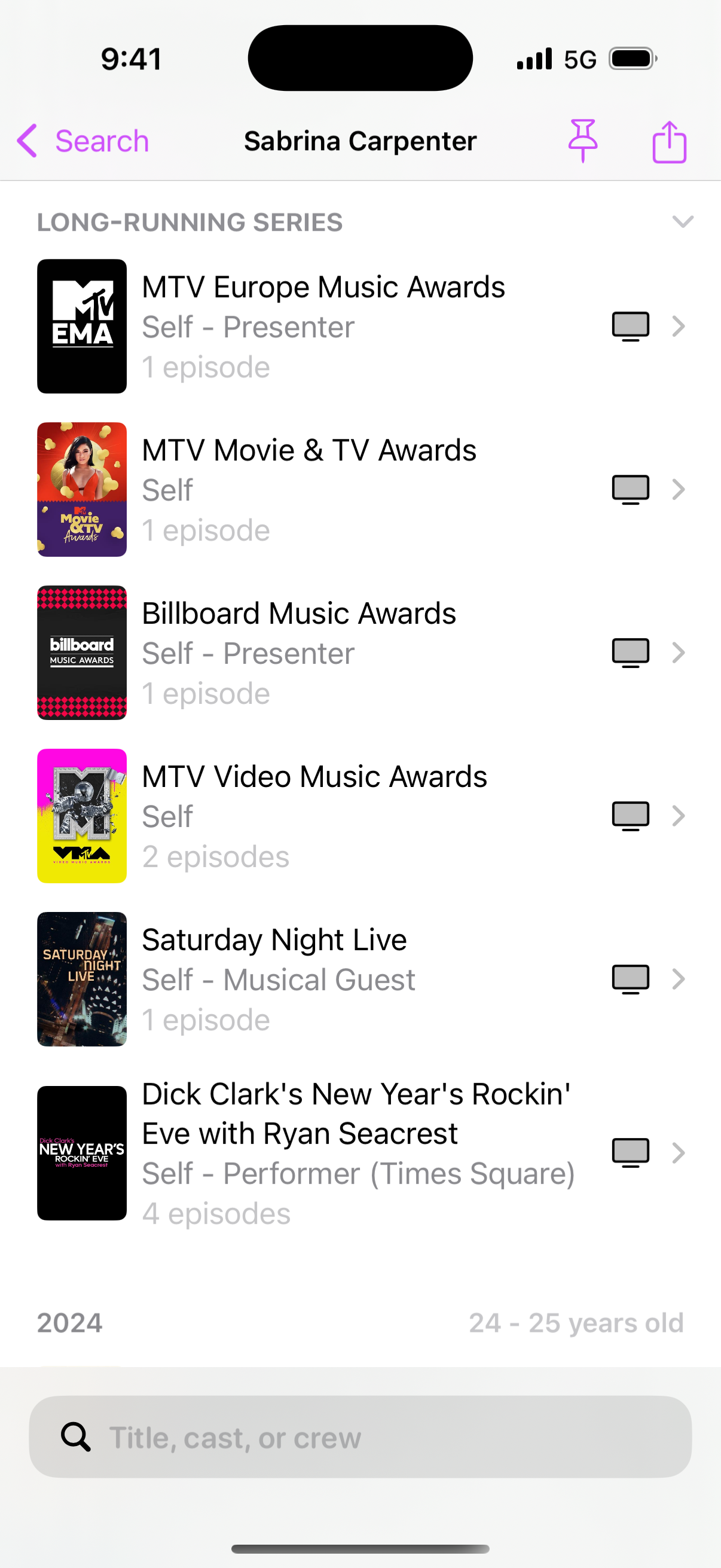

An extremely common — and fair! — complaint about Callsheet is the way I handle long-running series. Take, for example, Sabrina Carpenter, who was born in 1999. She has made appearances on shows like Saturday Night Live, but, well, after 1999. Due to a quirk in the way TMDB works, the way the information about her appearance on SNL is returned to me is based on the year that Saturday Night Live first aired. Which was 1975. Almost 25 years before Sabrina was born.

Coming up with a fix for this quirk is more challenging than you’d think — to properly fix it would require making a lot of requests to the TMDB servers. While each request is very fast, in aggregate, it would make Callsheet seem far slower. I don’t think perfect accuracy in a person’s filmography is worth slowing down the app.

Instead, I’ve tried to thread the needle by doing my best to group shows like these behind a new, collapsed, Long-Running Series section on a person’s filmography. Here you can see Sabrina’s filmography in version 2025.2; this is after I expanded the Long-Running Series section:

Note this is one of the first sections on a person’s filmography; it is slotted between Upcoming releases and the current year’s releases.
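The section ordering can be sketched as follows. This Python illustration takes the "is this a long-running series?" decision as a given input flag, because how Callsheet actually makes that call isn't covered here; the `Credit` shape and the flag name are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Credit:
    title: str
    year: Optional[int]         # None means no release date yet
    long_running: bool = False  # hypothetical flag, decided elsewhere


def filmography_sections(
    credits: list[Credit], current_year: int
) -> list[tuple[str, list[str]]]:
    """Order sections as: Upcoming, Long-Running Series, then years (newest first)."""
    upcoming: list[str] = []
    long_running: list[str] = []
    by_year: dict[int, list[str]] = {}

    for credit in credits:
        if credit.long_running:
            long_running.append(credit.title)
        elif credit.year is None or credit.year > current_year:
            upcoming.append(credit.title)
        else:
            by_year.setdefault(credit.year, []).append(credit.title)

    sections: list[tuple[str, list[str]]] = []
    if upcoming:
        sections.append(("Upcoming", upcoming))
    if long_running:
        sections.append(("Long-Running Series", long_running))
    for year in sorted(by_year, reverse=True):
        sections.append((str(year), by_year[year]))
    return sections
```

Because flagged credits are pulled out before any year bucketing happens, a show that premiered in 1975 never gets the chance to appear under 1975 on the page of someone born decades later.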

iPad/macOS Support for ⌘F

Good news: iPads with keyboards attached, as well as Macs, can now hit ⌘F to focus the search field.

Bad news: It’s a disgusting hack, and there’s nothing I can do about it. If anyone at Apple is reading this, FB16385764.

Automatic Dark Mode Icon Switchover

For the OG icon set — the clapperboard with magnifying glass — those icons now know what their dark variants are. For users that switch between light and dark mode, the OG icon set will automatically switch between light and dark variants.

Apologies for this taking so long, but to be completely honest, I hate the way my home screen looks with dark icons, so this wasn’t a high priority.

Other Quick Hits

There’s also a handful of other changes in this release:

- Users can now “roll the dice” to select a random entry from a list:

  - Tap the list’s name on the Discover (main) screen

  - Tap the dice button on the right-hand side

- Users can now un-hide the spoilers in the Known For section when viewing a person.

- There is a new Pretty in Pink version of the OG icon.

- I’ve started to slowly embrace TipKit, which means occasionally users will see a popover with hints on how to make the most of Callsheet.

- Some small VoiceOver fixes

- Fix an oddball case where a movie/show title with a question mark in it would lead to Callsheet getting confused when opening Wikipedia

- Fix a plurality mismatch in the pre-sale screen

- Started to work toward adopting Swift 6 Strict Concurrency… maybe one day I’ll actually finish that effort 😵‍💫
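The question-mark bug in the list above is a classic URL-encoding trap: an unescaped `?` in a URL starts the query string, so everything after it stops being part of the page name. Here is a generic Python sketch of the safe approach. How Callsheet really builds its Wikipedia links isn't described in this post, so the URL shape and the function name are assumptions.

```python
from urllib.parse import quote


def wikipedia_url(title: str) -> str:
    """Build an article URL with the title percent-encoded as a path segment."""
    slug = title.strip().replace(" ", "_")
    # safe="" makes sure "?" (and "/") are escaped rather than passed through.
    return "https://en.wikipedia.org/wiki/" + quote(slug, safe="")
```

For a title like `What If...?`, the trailing question mark becomes `%3F`, so it stays part of the page name instead of being read as the start of a query.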

[1] Briefly, there was a 2025.1, but early users reported a critical issue with it, so I stopped the roll-out pretty quickly. If you’re one of the [un]lucky few who got it, you may have seen two copies of the Pinned Items list on your device. You may have also noticed that neither of them could be deleted. Whoops. 🤦🏻‍♂️

2025.2 [hopefully] prevents this from happening, and provides an automated fix if it has happened.

Ten years ago today, I wrote this post.

As many of my posts do, it used many words to say one simple thing:

In the end, all I can say is that I’m so deeply, deeply lucky to have found you, Erin.

Twenty years ago today, Erin and I decided to become boyfriend and girlfriend. I would tell you that I asked her; she would tell you she asked me. Given my horrible memory, she’s surely right, but I kinda like that a definitive answer is lost to time. I’ve retconned it to be that we just made the decision together.

Together. Which we’ve been — as of today — for twenty years.

Erin said to me recently that people are often a part of a season of your life. A great example of this is coworkers; you may be close while you work together, but once one of you leaves, and you’re no longer speaking every weekday, friendship often tends to leave as well.

It’s a different season.

To make it twenty years with anyone — even family — is an immense accomplishment. It requires choosing each other often. In a romantic relationship, it requires choosing each other every time. Even if you don’t particularly want to, at that particular moment. When it’s easy, but also when it’s hard. Especially when it is hard.

What’s been so great about spending twenty years with Erin is that it is very rarely hard. Well, for me anyway. 🫣

Our twenty year wedding anniversary isn’t until a couple summers from now. To many couples, that is the anniversary that matters most. To me, while our wedding was immensely important, it was a formal codification of something we already knew. Something we had known for two and a half years: we are each other’s person.

We’ve known that since 2005. Since the sixteenth of January, to be exact.

Here’s to twenty years, Erin. To merging two lives into one. To bringing two all-new lives into the world. To trying, every day. To the [overwhelming majority of] times where it doesn’t feel like trying at all. To choosing each other… every day.

I love you. Happy anniversary. 🥰