Last week, I joined Leander Kahney, Lewis Wallace, and D. Griffin Jones on the Cult of Mac Podcast. On the episode, we discussed some news from the week, I did a [mostly successful] walkthrough of Callsheet, and then we played a little prediction game that Griffin put together for this week’s announcements.

In an unusual turn of events for me, we did the whole episode on video, so you can also watch on YouTube.

I had a lot of fun on the show, and even ended up steering us into having an after-show, which is a Cult of Mac Podcast first. (It was 🎵 accidental 🎵.)

Passwords are dumb.

I’m sorry, Ricky, but this isn’t a post about passkeys.

Instead, it’s a post about OpenID Connect, and more specifically, tsidp. But, much like a recipe found online, we’ll get there.

Over the last several years, I’ve become more and more of a “homelabber”. Your definition may vary, but to me, it means that I’m running more and more server or server-like applications and devices in my house.

Over the last couple weeks, I’ve made some significant changes to the hardware in my homelab, which has encouraged me to reevaluate some of the software choices I’ve made as well.

I may talk about this more here, and will certainly talk about it on ATP at some point, but the short-short is that I “repatriated” a NUC that I had stationed at a friend’s in Connecticut so that I could slurp up TV to watch Giants games. Once it was back in the house, I installed Proxmox (new to me!) and… things snowballed fast.

Last night, I was somehow reminded of a video by my friend Alex. In it, Alex explains that, for Tailscale users like myself, you’ve already established who you are, as long as you’re logged into your tailnet. Why not leverage that known identity for authorization?[1]

Enter tsidp. This is a very small and straightforward OIDC identity provider. Said differently, the applications in your homelab can ask “who is this person?”, and tsidp can answer. At that point, that account’s permissions are controlled within the app in question.

I started down this road with Proxmox, which was very straightforward, since Alex covered exactly that in his video. After Proxmox, I tried to figure out where else I could leverage OIDC, and found that Portainer supports it as well. As does Paperless-ngx. Both of these were a touch squishy to get configured properly, so I thought I’d share how I got them working here.

I’ll assume you can handle the tsidp side on your own; for this post, I’m concentrating on just the configuration on the app side.

Portainer

Let’s assume that your tailnet name is smiley-tiger.ts.net, and you’ve set up tsidp at idp.smiley-tiger.ts.net. Naturally, you’ll need to change these for the particulars of your install/tailnet.

In Settings → Authentication:

- Select OAuth as your Authentication method
- Turn on Use SSO. I recommend leaving Hide internal authentication prompt off, for safety’s sake
- Leave defaults until you get to the Provider, where you’ll select Custom.
- For the OAuth Configuration, use the following. For URLs, note that trailing slashes are not used:
  - Client ID and Client secret: from tsidp
  - Authorization URL: `https://idp.smiley-tiger.ts.net/authorize`
  - Access token URL: `https://idp.smiley-tiger.ts.net/token`
  - Resource URL: `https://idp.smiley-tiger.ts.net/userinfo`
  - Redirect URL: your Portainer URL, such as `https://portainer.smiley-tiger.ts.net`
  - User identifier: `preferred_username`
  - Scopes: `email openid profile`

After you set all those settings, you should be able to connect with tsidp by simply tapping the large Login with OAuth button.
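To make those fields a little less abstract, here is a minimal Python sketch of the first step of the OpenID Connect authorization code flow: building the URL a client like Portainer sends your browser to. Portainer builds this URL itself; the tailnet, the `YOUR_CLIENT_ID` placeholder, and the `endpoint`/`authorization_url` helpers are hypothetical, and a real client would also verify the `state` value (and often send a `nonce`) when tsidp redirects back.

```python
import secrets
from urllib.parse import urlencode

# Hypothetical values; swap in your own tailnet, tsidp client ID, and Portainer URL.
IDP_BASE = "https://idp.smiley-tiger.ts.net"
CLIENT_ID = "YOUR_CLIENT_ID"
REDIRECT_URI = "https://portainer.smiley-tiger.ts.net"
SCOPES = ["email", "openid", "profile"]


def endpoint(path: str) -> str:
    """Join the tsidp base URL and an endpoint path, with no trailing slash."""
    return f"{IDP_BASE.rstrip('/')}/{path.strip('/')}"


def authorization_url(state: str) -> str:
    """Build the authorization request URL for the authorization code flow."""
    params = {
        "response_type": "code",   # ask tsidp for a one-time authorization code
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": " ".join(SCOPES),  # urlencode turns the spaces into '+'
        "state": state,             # random value the client checks on the way back
    }
    return f"{endpoint('authorize')}?{urlencode(params)}"


if __name__ == "__main__":
    for name in ("authorize", "token", "userinfo"):
        print(endpoint(name))
    print(authorization_url(secrets.token_urlsafe(16)))
```

After tsidp redirects back with a `code`, the client exchanges it at the token endpoint, then asks the userinfo endpoint “who is this person?”; the User identifier setting tells Portainer to use the `preferred_username` claim from that answer.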

Note that, as usual, you’ll likely need to bless the account you just created with privileges using your previous, internally authorized, account.

Paperless-ngx

This one was quite a bit less straightforward. Previously unbeknownst to me, Paperless runs on Django, so its OIDC configuration is basically pulled from django-allauth, the Django authentication package it uses.

To configure this in Paperless, you need to set some environment variables. I’m using Docker Compose, so in my `environment` clause, I added the following:

```yaml
environment:
  PAPERLESS_SOCIALACCOUNT_PROVIDERS: >
    {
      "openid_connect": {
        "APPS": [
          {
            "provider_id": "tailscale",
            "name": "Tailscale",
            "client_id": "{{YOUR_CLIENT_ID}}",
            "secret": "{{YOUR_SECRET}}",
            "settings": {
              "server_url": "https://idp.smiley-tiger.ts.net"
            }
          }
        ]
      }
    }
```

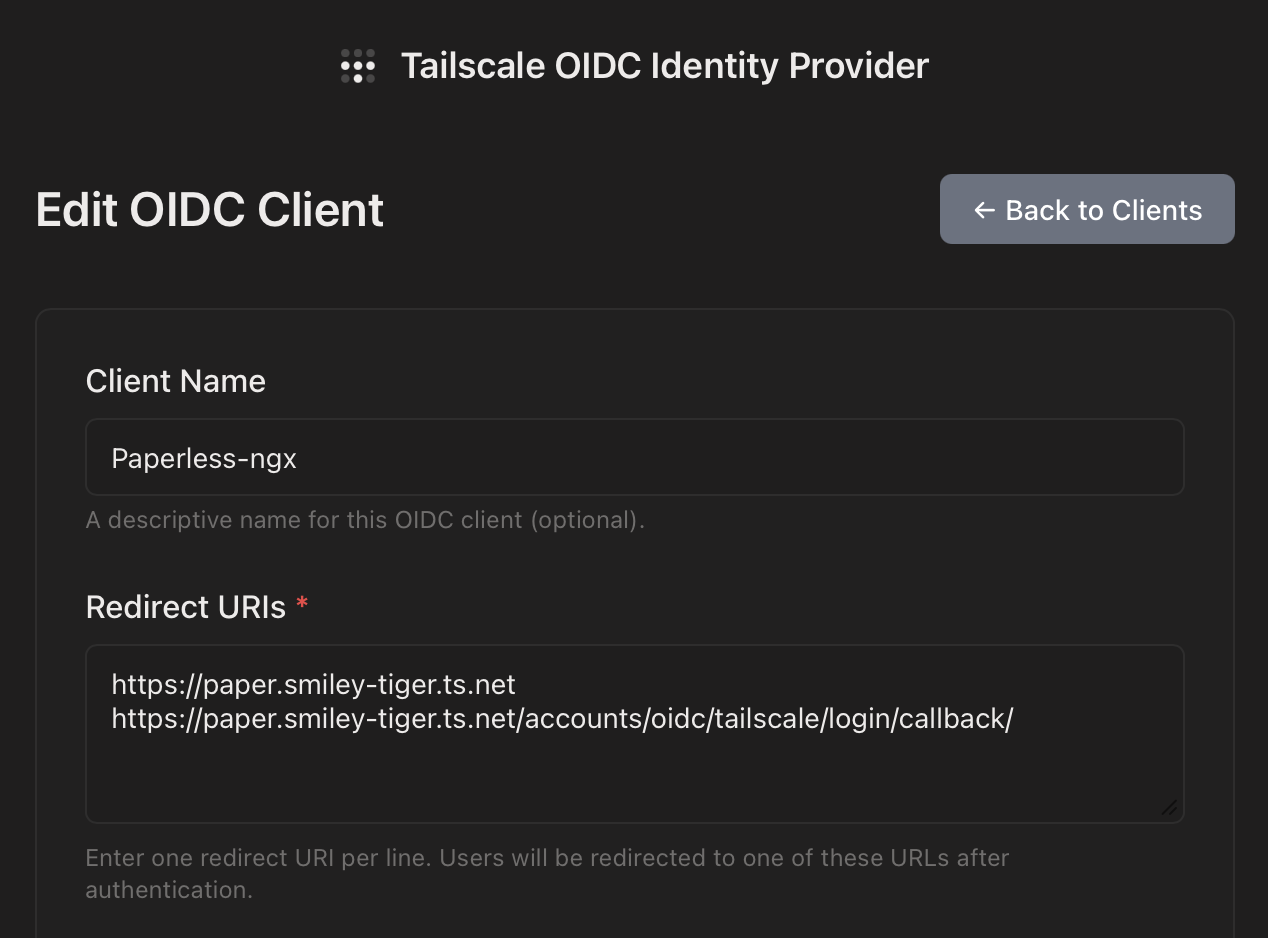

After doing so, and setting things up in tsidp as I had before, I was faced with errors on the tsidp side about a mismatched callback URL. After much trial and error, I discovered I needed to add another valid URL to tsidp. This is how I ended up:

The key here was the second URL:

https://paper.smiley-tiger.ts.net/accounts/oidc/tailscale/login/callback/

Note a couple things about this URL:

- The `tailscale` you see there is the `provider_id` you specified in the environment variable above
- You actually do need the trailing slash in this case
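Because the `provider_id` ends up inside the callback URL, a small Python sketch can derive the URL to allow in tsidp straight from the JSON in the environment variable above. It assumes Paperless-ngx lives at `https://paper.smiley-tiger.ts.net` and that the path follows the `/accounts/oidc/<provider_id>/login/callback/` pattern of the URL above; `callback_urls` is a hypothetical helper, not part of Paperless-ngx.

```python
import json
from typing import List

# The JSON from the PAPERLESS_SOCIALACCOUNT_PROVIDERS environment variable above.
PROVIDERS_JSON = """
{
  "openid_connect": {
    "APPS": [
      {
        "provider_id": "tailscale",
        "name": "Tailscale",
        "client_id": "{{YOUR_CLIENT_ID}}",
        "secret": "{{YOUR_SECRET}}",
        "settings": {"server_url": "https://idp.smiley-tiger.ts.net"}
      }
    ]
  }
}
"""

PAPERLESS_URL = "https://paper.smiley-tiger.ts.net"  # assumed Paperless-ngx address


def callback_urls(providers_json: str, base_url: str) -> List[str]:
    """Return the callback URL tsidp should allow for each configured OIDC app."""
    apps = json.loads(providers_json)["openid_connect"]["APPS"]
    base = base_url.rstrip("/")
    # The trailing slash is deliberate: it is part of the path Paperless redirects to.
    return [f"{base}/accounts/oidc/{app['provider_id']}/login/callback/" for app in apps]


if __name__ == "__main__":
    for url in callback_urls(PROVIDERS_JSON, PAPERLESS_URL):
        print(url)
```

As a side benefit, `json.loads` raises an error if the JSON pasted into the environment variable is malformed, which is easy to do inside a YAML block.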

Once I had that set, I was able to log in. As with Proxmox and Portainer, I then had to bless that new user with Administrative privileges, and then I was off to the races.

The Future

At this point, I think I’ve enabled OIDC for everything I can. There’s a suite of other apps I’d love to use OIDC for, but apparently it’s not supported yet. (IYKYK)

That said, I can now log into Proxmox, Portainer, and Paperless-ngx — all the P’s! — without having to enter a password. And that’s pretty rad.

[1] I’m no security professional, and it wouldn’t surprise me if I’m getting these terms slightly wrong. Just roll with me here.

Since becoming afflicted — becoming a Home Assistant user — I’ve shown the same symptoms as this illness always does: I want to automate everything.

My home automation journey started during the pandemic, when I wanted an LED light in our bedroom that illuminated when the garage door was open. This required a Rube Goldberg contraption made of two Raspberry Pis, a contact sensor, and some custom Python. After the project was over, I had an LED light attached to our headboard that would only illuminate when the garage door was open. It’s saved us from leaving the door open overnight a handful of times.[1]

In the ensuing years, I have wanted to find a similar, but slightly more general-purpose solution. There are some other state-of-the-house things I’d like to monitor, and I’d like to do so in a more central place in the house. Since our house was built in the late 90s, we have an RJ11 jack coming out of one of the kitchen walls at nearly eye level. I had tried to convince Erin to let me repurpose that — my vision was to drill a trio of LEDs into a blank faceplate, stick something behind it, and call it a day.

Unfortunately, Erin has taste, and gently led me to the conclusion this would be… a bit of an eyesore. So I had to re-evaluate.

Around this time, I was lamenting this problem on ATP, and a listener pointed me to these now-discontinued HomeSeer switches. At a glance, this is a standard Decora-style dimmer that can be controlled via Z-Wave. However, upon closer inspection, there are seven LEDs on the left-hand side. Even more interestingly, you can put the switch in “status mode”, which means each of the LEDs can be individually controlled.

And just to put icing on the cake, the listener had an extra that they were willing to send me. Thank you, again, Chris R. 🥰

Once it arrived, I had to teach myself Z-Wave and figure out how to get the HomeSeer into “status mode”. Once I did, though, it was quick and astonishingly easy to get Home Assistant talking to not only the dimmer, but the LEDs as well.

So, here’s where I ended up:

The image is under-exposed on purpose, and the colors are a touch off in this photo, but I assure you they’re vivid but not attention-grabbing when seen in person.

The center switch actually controls the lights above our kitchen table. But for me, it’s the home status board. Here’s what each of the lights indicates, from top to bottom:

| LED Color | Purpose |
|---|---|
| None | Not currently used |
| Green | The laundry needs attention |
| Blue | The mail is waiting |
| None | Not currently used |
| White | The Volvo is charging |
| Magenta | The shed door is open |
| Red | The garage door is open |

The steady/all-good state of the status board is for all of the LEDs to be extinguished. If any of them is on, it doesn’t necessarily mean that something needs attention, but it may.
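The table and the all-off rule map naturally onto a tiny data structure. The Python sketch below is purely illustrative: `Led`, `SLOTS`, `status_board`, and `all_clear` are hypothetical names, and in the real setup Home Assistant sets each LED on the HomeSeer switch over Z-Wave rather than through anything like this.

```python
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Led(Enum):
    OFF = "off"
    ON = "on"
    FLASH = "flash"


# The seven LEDs, top to bottom, as (system, color); None marks an unused slot.
SLOTS: List[Optional[Tuple[str, str]]] = [
    None,
    ("laundry", "green"),
    ("mail", "blue"),
    None,
    ("volvo", "white"),
    ("shed", "magenta"),
    ("garage", "red"),
]


def status_board(systems: Dict[str, Led]) -> List[Led]:
    """Map each system's LED state onto the physical LEDs; unused or unreported slots stay off."""
    return [Led.OFF if slot is None else systems.get(slot[0], Led.OFF) for slot in SLOTS]


def all_clear(board: List[Led]) -> bool:
    """The steady, all-good state: every LED extinguished."""
    return all(led is Led.OFF for led in board)
```

For example, `status_board({"garage": Led.FLASH})` leaves six LEDs off and flashes the bottom (red) one, so `all_clear` returns `False` while the door is moving.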

The green LED will flash while the washer or dryer is running, and remain lit when clothes need to be moved.[2]

The blue LED will illuminate if the mailbox has been opened for the first time in the day, and if that opening happened after 10 AM. We almost always place outgoing mail in the mailbox first-thing in the morning, and the mail almost never comes before dinnertime. A LoRa contact sensor is what’s feeding this.
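The blue LED’s rule boils down to a bit of date logic. Here is a hypothetical Python sketch, reading the rule as “the first opening of the day after 10 AM”, since the morning outgoing-mail opening is exactly what the cutoff filters out. The function names are illustrative; the real logic presumably lives in a Home Assistant automation fed by the LoRa contact sensor, and how the LED gets cleared afterward isn’t modeled here.

```python
from datetime import date, datetime, time
from typing import List, Optional

# Openings at or before this time are assumed to be outgoing mail being dropped off.
MAIL_CUTOFF = time(10, 0)


def first_delivery_opening(openings: List[datetime], day: date) -> Optional[datetime]:
    """Return the earliest mailbox opening on `day` after the cutoff, if any."""
    after_cutoff = [t for t in openings if t.date() == day and t.time() > MAIL_CUTOFF]
    return min(after_cutoff) if after_cutoff else None


def blue_led_on(openings: List[datetime], day: date) -> bool:
    """Light the blue LED once the mailbox has been opened after the cutoff that day."""
    return first_delivery_opening(openings, day) is not None


if __name__ == "__main__":
    day = date(2025, 1, 6)
    morning = datetime(2025, 1, 6, 7, 45)   # outgoing mail goes in
    evening = datetime(2025, 1, 6, 17, 30)  # the carrier delivers
    print(blue_led_on([morning], day))           # False
    print(blue_led_on([morning, evening], day))  # True
```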

The white LED will illuminate as long as Erin’s plug-in hybrid is actively charging. Home Assistant has an integration for Volvo.

The magenta LED will illuminate if the shed door is open. Here again, another LoRa contact sensor.

The red LED will illuminate when the garage door is open, and it will flash while it is raising or lowering. Home Assistant has an integration for our weird garage door opener.

This status board is, for any reasonable human, lunacy. None of this is particularly necessary, and I could make a strong argument that most of it isn’t particularly helpful. For example, the distance between the red “the garage is open” LED and the doorway to the garage is approximately three paces.

That said, I have come to quite like having it in the kitchen, where I naturally pass by it many times daily, to be able to see the state of some critical systems in the house. The kids have, slowly, started to learn which LED means what.

Erin could not possibly care less. She’s just happy not to have the eyesore I originally proposed.

[1] Over the years, the way the LED works has changed. It’s still powered by a Raspberry Pi, but instead of some bespoke communication over UDP, it listens for a topic on my local MQTT server, and will illuminate based on that. Currently, Home Assistant will tell it to illuminate if the shed or the garage is open.

[2] We have a natural gas dryer, and thus it uses a standard 110V plug. I put the washer and dryer on their own Shelly plugs, and Home Assistant monitors their power usage. When the washer’s power draw falls below a few hundred watts for a full minute, it is assumed the clothes are waiting to be moved to the dryer.

When the dryer starts drawing a few hundred watts, it is assumed the clothes are drying.

When the dryer is drawing a low wattage, it’s assumed the clothes have been removed. The low wattage is because it’s powering its internal light.

This system isn’t 100% perfect, but it’s been surprisingly accurate.
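Those power-draw rules amount to a small state machine. Below is a hypothetical Python model of it, not the actual Home Assistant automation: the thresholds are placeholders for “a few hundred watts” and “a low wattage”, it assumes one load at a time, and it assumes the dryer dropping below running power is what means clothes are waiting to come out. `green_led` then applies the flash-while-running, lit-while-waiting rule for the green LED.

```python
from enum import Enum
from typing import Optional

# Hypothetical thresholds; the real values are only described as "a few hundred watts"
# and "a low wattage".
RUNNING_WATTS = 200.0     # at or above this, a machine is assumed to be running
LIGHT_WATTS = 5.0         # dryer at or above this but below RUNNING_WATTS: light is on
WASHER_QUIET_SECS = 60.0  # washer must stay below RUNNING_WATTS this long to be done


class Laundry(Enum):
    IDLE = "idle"
    WASHING = "washing"
    NEEDS_DRYER = "needs_dryer"        # washer done; move clothes to the dryer
    DRYING = "drying"
    NEEDS_EMPTYING = "needs_emptying"  # dryer done; clothes still inside


class LaundryTracker:
    """Single-load state machine driven by washer and dryer power readings."""

    def __init__(self) -> None:
        self.state = Laundry.IDLE
        self._washer_quiet_since: Optional[float] = None

    def update(self, now: float, washer_w: float, dryer_w: float) -> Laundry:
        """Feed one reading (timestamp in seconds, power in watts); return the new state."""
        if self.state is Laundry.IDLE and washer_w >= RUNNING_WATTS:
            self.state = Laundry.WASHING
        elif self.state is Laundry.WASHING:
            if washer_w >= RUNNING_WATTS:
                self._washer_quiet_since = None  # still running; reset the timer
            elif self._washer_quiet_since is None:
                self._washer_quiet_since = now  # start the one-minute countdown
            elif now - self._washer_quiet_since >= WASHER_QUIET_SECS:
                self.state = Laundry.NEEDS_DRYER
                self._washer_quiet_since = None
        elif self.state is Laundry.NEEDS_DRYER and dryer_w >= RUNNING_WATTS:
            self.state = Laundry.DRYING
        elif self.state is Laundry.DRYING and dryer_w < RUNNING_WATTS:
            self.state = Laundry.NEEDS_EMPTYING
        elif self.state is Laundry.NEEDS_EMPTYING and LIGHT_WATTS <= dryer_w < RUNNING_WATTS:
            # Interior light drawing power: assume the door is open and the clothes are out.
            self.state = Laundry.IDLE
        return self.state


def green_led(state: Laundry) -> str:
    """Flash while a machine runs, stay lit while clothes wait, otherwise stay off."""
    if state in (Laundry.WASHING, Laundry.DRYING):
        return "flash"
    if state in (Laundry.NEEDS_DRYER, Laundry.NEEDS_EMPTYING):
        return "on"
    return "off"
```

Requiring a full minute of low draw before declaring the washer done, rather than reacting to a single low reading, presumably keeps a brief pause mid-cycle from being mistaken for the end of the load.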

A few years ago, AT&T sunset our then-current plan, and aggressively forced us to switch to a different, more modern plan. Naturally, that more modern plan just happened to be quite a lot more money.

🙄

We figured we could save money by pairing our Internet & TV service with our mobile service. We switched from AT&T → Verizon. At the time, we were saving a ton of money. In fact, since we didn’t get new phones as part of the deal, we got something like $1000 in credits that we used to pay for service for the better part of a year.

Over the years, the cost of both our home and mobile plans has ratcheted up, to the point that we’re now paying ~$135/mo (❗️) for Internet and TV, and roughly $185/mo (‼️) for two phones and an iPad. The bills are too damn high.

A few listeners — apropos of me seeking a pay-as-you-go wireless hotspot[1] — pointed me to US Mobile. They are an MVNO that actually works with all three major networks[2]. Allegedly they’re easy to work with, which is to say they have a modern and easily navigable website.

More importantly, however, they offer darn near all the same stuff as the big three do, but for way less money. Their most expensive plan, before add-ons, is $32.50 per month.

I decided I was going to give them a shot, because I should be able to save at least $30/month by switching.[3] So, I went to Verizon’s website, and tried to find the place where I could start the number porting process. I couldn’t find it quickly, so I searched for “port number”.

And I’ll be damned, but once I saw the results of my search, right there at the top, just below the search box, was an offer to save $20 per month per line. Just because.

So I clicked it. It didn’t work, because Verizon’s website is trash, but it did offer a link to a place I hadn’t previously found, where I had several offers. All of them had catches of some sort — get a new phone for free and commit to a new two-year agreement and so on.

But sure enough, there was a $20/mo/line discount offered. I clicked on it, verified I wanted it on both Erin’s and my lines, and that was that. In theory, our bill next month will be $145, rather than $185.

So, if you’re out of a long-term contract with your carrier, and are just riding month-to-month, it may be worth trying the same thing.

I get that it’s not exactly in Verizon’s best interests to give me the best deal they possibly can. I get that they’re a business designed to extract money from my wallet, so they can place it in theirs. But still, this felt gross. It was only when they [rightly!] detected they were in the midst of a potential case of churn that they suddenly found a way to lower my bill.

I hate everything, at the moment. But right now I really hate capitalism.

Well, and ICE. Screw those lawless chodes.

[1] US Mobile doesn’t really fit the bill here, but if you have any tips, I’m all ears. Simo seems to be exactly what I’m seeking, but I’ve heard both good and bad.

[2] Interestingly, with some restrictions/cost depending on your particular plan, you can swap between networks. Suppose you’re on Verizon when you sign up, but you later move and AT&T has better coverage; you can switch, all while staying a US Mobile customer. Or, for a monthly fee, you can have your phone ride on two networks for better coverage.

[3] Our Verizon situation comes with discounts for having all three services with them. We also currently get “free” Disney+ and ESPN+, which would need to be paid for directly in the future. Additionally, we pay $10/mo for the cellular iPad, and that would need to be accounted for as well.

The last couple weeks have been really busy, so I didn’t get a chance to post about my appearance on Downstream. As discussed on ATP, it sounds like my time with a CableCARD-powered HDHomeRun is likely coming to an end.

On this episode of Downstream, Jason and I talked a bit about Callsheet, and then he helped guide me through the process of figuring out what comes after Verizon shifts to IPTV and retires their CableCARDs. It was, selfishly, extremely useful; however, I think it also serves as a good model for figuring out which of the myriad of TV streaming services is the best fit for you.

This week, I joined my friends Dan, Mikah, and Rosemary on Clockwise.

On this week’s episode, we discussed robot vacuums growing limbs, technology for starting the new year right, the technology we covet but can’t justify, and discontinued tech products we still employ day-to-day.

In a rare bit of website follow-up, I wanted to call your attention to this episode of The Good News Podcast. I really love this show because it’s extremely short — generally less than five minutes — and it’s always about something happy.

About a week ago, their story was on the aforementioned “trashed panda”.

The Good News Podcast is always worth your [small amount of] time, but this one was particularly delightful for me.

My hometown of Richmond, Virginia has made national news for a couple of fun, feel-good stories. I thought I’d share both.

First, and more recently, a raccoon stumbled into a local ABC (liquor) Store, and appeared to get loaded and then pass out in the bathroom. This news — probably in no small part due to the amazing photograph — actually made international news, as even the BBC got wind of it.

This quote really tickled me:

[Animal Control] Officer Martin said the animal had fallen through one of the ceiling tiles before going “on a full-blown rampage, drinking everything”.

#goals, amirite?

Anyway, this was lampooned on Saturday Night Live this past week:

Earlier this year, a tragedy happened. Years ago, a stray cat wandered into a local Lowe’s and took up residence. Since then, Francine the cat has become, to a degree, a bit of a local celebrity.

Tragedy struck a couple months back, however, when Francine went missing.

The story, at the time, was long and harrowing. The short-short is that she meandered onto an 18-wheeler that was returning to a shipping hub in neighboring North Carolina. After a surprisingly extensive search, Francine was eventually located, and returned home… to Lowe’s.

The story was covered on CBS Sunday Morning this week:

Richmond is a small city, and it’s rare anyone thinks about us at all. To have two national/international stories land in a week is quite unusual. I’m glad a little more attention is being paid to the place I call home. 😊

Periodically, my friends at Mac Power Users, David and Stephen, take the pulse of the Apple developer community. This year, I was lucky enough to be asked to join the MPU Developer Roundtable 2025, along with my friends Charlie and James.

Our conversation was varied, and I like to think, quite interesting. We discussed Liquid Glass, SwiftUI, documentation, subscriptions, Apple Intelligence, and more.

I love these episodes, as they get to be a bit of a time capsule, chronicling the state of the world at a particular moment in time. It was a great honor to be asked to participate.

This week I joined my pals Dan, Mikah, and Joe Rosensteel on Clockwise.

On this episode, we discussed Apple’s brand-new M5, how we’re using AI locally — if at all — and media that’s brought us joy lately (for me, The Murderbot Diaries). Finally, we decided to take it upon ourselves to fix some of Apple’s bonkers product names.

For someone who is used to two-hour podcast episodes, Clockwise is a fun change of pace. If you haven’t dipped your toe in recently, you should!