The beginnings were… odd.

“What is it that makes somebody buy a white car?”

“Oh, you’re a 🍆.”

Perhaps it’s fitting — given my predilection for… coarse language… that this was my podcasting debut. Me calling Marco a… ahem… “jerk”.

Neutral began in January, but by March, we knew that the car show had mostly run its course. However, what began as an after-show on Neutral had come into its own.

Ten years ago today, we formally launched the Accidental Tech Podcast. A show born out of our idle chatter after our “real” podcast.

Ten. Years. Ago. Today.

A full decade.

When we launched ATP, I was working as a .NET developer at a local consulting firm. John was just a few years into a job he would, years later, end up leaving to go independent. Marco hadn’t yet announced Overcast.

I had no children. Marco’s son wasn’t even a year old. John’s youngest was barely out of preschool.

Now, I have two children, the youngest of whom enters kindergarten next year. Marco’s son is soon to be in middle school. John’s youngest is not too far from college; his eldest is already there.

A lot of time has passed. All during the Accidental Tech Podcast.

The start of ATP is somewhat nebulous — was it when Marco released us goofing off on SoundCloud? Perhaps, but I’ve always felt this was the start of ATP:

Ten years ago today.

I am indescribably lucky to be able to talk to my friends — including Myke as well — and earn money for doing so. I take the work very seriously — and sometimes it really is work. But more often than not, even if it’s work, it’s also tremendous fun.

It’s thanks to our listeners, and probably you, reading these words, that we are able to sell ads and memberships and make a living doing something we love.

Thank you, so very much, for making these last ten years possible. Here’s hoping the end is nowhere in sight… 🤞🏻

I’ve listened to Upgrade since episode one. It’s one of my favorite tech podcasts, and honestly, one of my favorite podcasts, period. Myke and Jason do a phenomenal job of regularly reinventing the show, keeping it extremely fresh even after nine years.

This week, I filled in for Jason while he was on a well-deserved vacation. Since Myke and I regularly record Analog(ue) together, I was curious what kind of rhythm we’d fall into for the episode. I’m really pleased how it turned out, and I think Myke and I both did a pretty good job.

On the episode, we discussed Apple’s “moonshot” team, new iPhones, the Mac Pro (yes, really), and played a new game together. Myke also asked me a bunch of listener questions, which was extremely fun.

I like to think it was a good one.

Another good one you should check out is the episode prior, when my dear friend Dave filled in for Jason. That one was unquestionably excellent.

This week I joined my pals Bryan Guffey, Dan Moren, and Mikah Sargent on Clockwise.

On today’s episode, we discussed how modern-day internet search is both impressive and disappointing, what we’re doing with AI tools, the ethics behind AI voice generation, and our favorite Black technologists.

We ran nearly an hour on this recording, which left quite a challenging edit for poor Dan this week, as Clockwise is 30 minutes or less. 😬

It’s been said that the whole of iOS development is turning JSON into UITableViews. There’s probably more truth to that than I care to admit.

Oftentimes, especially when working on an app as part of a company’s in-house team, an iOS developer can work with the server/API team to agree on what JSON will be used. Sometimes, especially when working as an independent developer, the API is “foreign”, and thus the JSON it emits is outside of an iOS developer’s control.

Occasionally, APIs will make choices for their JSON structures that make perfect sense in more loosely-typed languages, but for strongly-typed languages like Swift, these choices can be more challenging. The prime example of this is JSON’s heterogeneous arrays.

In JSON, it is completely valid to have an array of objects that are not alike.

An extremely simple example could be `[1, "two", 3.0]`. In more typical examples, these arrays won’t hold primitives but rather objects, and each object in these heterogeneous arrays will typically have vastly different key/value pairs. It’s easy to store heterogeneous arrays in Swift as an array of Dictionaries, but that… isn’t very Swifty.

What’s the more Swifty version, then? Preferably using Decodable?

This post is my attempt to describe exactly that.

Let’s assume you’re hitting some web API that describes a restaurant. It will return a restaurant’s name, as well as its menu, which is a heterogeneous array of objects that represent drinks, appetizers, and entrees.

Let’s further assume that the developers of this API made some annoying choices about how to name things, such that there’s no clear and easy way to make a base protocol that all the menu items can conform to. 😑

So, some example JSON may look like this:

```json
{
    "name": "Casey's Corner",
    "menu": [
        {
            "itemType": "drink",
            "drinkName": "Dry Vodka Martini"
        },
        {
            "itemType": "drink",
            "drinkName": "Jack-and-Diet"
        },
        {
            "itemType": "appetizer",
            "appName": "Nachos"
        },
        {
            "itemType": "entree",
            "entreeName": "Steak",
            "temperature": "Medium Rare"
        },
        {
            "itemType": "entree",
            "entreeName": "Caesar Salad"
        },
        {
            "itemType": "entree",
            "entreeName": "Grilled Salmon"
        }
    ]
}
```

The restaurant, Casey's Corner, serves two drinks, a Dry Vodka Martini and a Jack-and-Diet. It serves one appetizer, Nachos. It serves three entrees: Steak (which has an associated temperature), Caesar Salad, and Grilled Salmon.

The type of each menu item is defined as part of the menu item itself, using the `itemType` key. Note that we don’t particularly care about this key in our Swift objects, as their type will implicitly give us this information. We don’t want to clutter our plain old Swift objects with an `itemType` property.

How can we represent this in Swift? Most of this is straightforward:

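```swift
struct Drink: Decodable {
    let drinkName: String
}

struct Appetizer: Decodable {
    let appName: String
}

struct Entree: Decodable {
    let entreeName: String
    let temperature: String?
}

struct Restaurant: Decodable {
    let name: String
    // Decodable can't be synthesized for [Any], so this won't compile
    // as-is; the hand-written init(from:) below fixes that.
    let menu: [Any]
}
```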

Note that the `menu` property is `Array<Any>`. Again, in most examples, you’d probably be able to avoid this, and figure out some sort of common base type instead. I wanted to have a clear, bare-bones example that shows how to do this all by hand, no base types allowed.

For `Drink`, `Appetizer`, and `Entree`, we can rely on the automatically synthesized `Decodable` implementations: no further work required.
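To see that the synthesized conformance really does ignore `itemType`, here’s a minimal sketch, assuming Foundation’s `JSONDecoder`, that decodes two of the menu objects above straight into `Entree`. The `steakJSON` and `salmonJSON` names are just for illustration:

```swift
import Foundation

// Same shape as the Entree type above; the synthesized init(from:)
// only looks up keys that match its property names.
struct Entree: Decodable {
    let entreeName: String
    let temperature: String?
}

// Two menu objects copied from the sample JSON, itemType and all.
let steakJSON = #"{"itemType": "entree", "entreeName": "Steak", "temperature": "Medium Rare"}"#
let salmonJSON = #"{"itemType": "entree", "entreeName": "Grilled Salmon"}"#

let decoder = JSONDecoder()
let steak = try decoder.decode(Entree.self, from: Data(steakJSON.utf8))
let salmon = try decoder.decode(Entree.self, from: Data(salmonJSON.utf8))

// The unknown "itemType" key is ignored, and the missing "temperature"
// key decodes to nil because the property is optional.
print(steak.entreeName, steak.temperature ?? "none")
print(salmon.entreeName, salmon.temperature ?? "none")
```

The catch is that nothing here tells us *which* type to decode into. That’s the part `Restaurant` has to handle.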

Restaurant is a whole different story, however.

The thing is, we need to be able to peek into the `menu` JSON array in order to see `itemType` for each menu item, but then we need to back up and actually decode each menu item. This gets wonky fast.

Here’s how I did it:

```swift
struct Restaurant: Decodable {
    let name: String
    let menu: [Any]

    // The normal, expected CodingKey definition for this type
    enum RestaurantKeys: CodingKey {
        case name
        case menu
    }

    // The key we use to decode each menu item's type
    enum MenuItemTypeKey: CodingKey {
        case itemType
    }

    // The enumeration that actually matches menu item types;
    // note this is **not** a CodingKey
    enum MenuItemType: String, Decodable {
        case drink
        case appetizer
        case entree
    }

    init(from decoder: Decoder) throws {
        // Get the keyed container for the top-level object
        let container = try decoder.container(keyedBy: RestaurantKeys.self)

        // Decode the easy stuff: the restaurant's name
        self.name = try container.decode(String.self, forKey: .name)

        // Create a place to store our menu
        var inProgressMenu: [Any] = []

        // Get the menu array's container; this one is only used to read each item's type
        var arrayForType = try container.nestedUnkeyedContainer(forKey: .menu)

        // Make a copy of it for reading the actual menu items. This relies on
        // the container being a value type, as JSONDecoder's currently is.
        var array = arrayForType

        // Start reading the menu array
        while !arrayForType.isAtEnd {
            // Get the object that represents this menu item
            // (this advances arrayForType, but not array)
            let menuItem = try arrayForType.nestedContainer(keyedBy: MenuItemTypeKey.self)

            // Get the type from this menu item
            let type = try menuItem.decode(MenuItemType.self, forKey: .itemType)

            // Based on the type, create the appropriate menu item.
            // Note we're switching to using `array` rather than `arrayForType`
            // because we need our place in the JSON to be back before we started
            // reading this menu item.
            switch type {
            case .drink:
                let drink = try array.decode(Drink.self)
                inProgressMenu.append(drink)
            case .appetizer:
                let appetizer = try array.decode(Appetizer.self)
                inProgressMenu.append(appetizer)
            case .entree:
                let entree = try array.decode(Entree.self)
                inProgressMenu.append(entree)
            }
        }

        // Set our menu
        self.menu = inProgressMenu
    }
}
```

The trick here is just before, and within, the `while` loop. As we realized above, we need to be able to peek into each object in the `menu` array. However, in doing so, we advance the position of the `arrayForType` container, which means we can’t back up and grab the whole object for parsing into a Swift object.

Thus, we make a copy of the `arrayForType` container, called `array`, which we use to decode full objects. When `arrayForType` advances as we decode `itemType`, `array` does not. This way, when we `decode()` our `Drink`, `Appetizer`, or `Entree`, `arrayForType` has moved on, but `array` is still positioned at the start of the current menu item.

I’ve put all this in a gist on GitHub. I’m sure that other Swift developers will have thoughts on my approach here; if you’re that person, please feel free to either leave a comment on the gist, or fork it.
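One caveat about the copy trick: it depends on the unkeyed container behaving as a value type. That holds for `JSONDecoder`’s container, but the `UnkeyedDecodingContainer` protocol itself doesn’t promise it. If you’re willing to bend the no-base-types rule, a common alternative is to wrap each menu item in an enum with associated values. Each array element’s own decoder can peek at `itemType` and then hand that *same* decoder to the concrete type. This is a different technique from the one above. It’s a sketch, and `MenuItem`, `TypeKey`, and `Kind` are hypothetical names:

```swift
import Foundation

struct Drink: Decodable { let drinkName: String }
struct Appetizer: Decodable { let appName: String }
struct Entree: Decodable {
    let entreeName: String
    let temperature: String?
}

// Hypothetical wrapper: one case per kind of menu item.
enum MenuItem: Decodable {
    case drink(Drink)
    case appetizer(Appetizer)
    case entree(Entree)

    private enum TypeKey: CodingKey { case itemType }
    private enum Kind: String, Decodable { case drink, appetizer, entree }

    init(from decoder: Decoder) throws {
        // Peek at itemType using a keyed container on this element...
        let container = try decoder.container(keyedBy: TypeKey.self)
        let kind = try container.decode(Kind.self, forKey: .itemType)
        // ...then let the concrete type decode from the same decoder.
        switch kind {
        case .drink: self = try .drink(Drink(from: decoder))
        case .appetizer: self = try .appetizer(Appetizer(from: decoder))
        case .entree: self = try .entree(Entree(from: decoder))
        }
    }
}

struct Restaurant: Decodable {
    let name: String
    let menu: [MenuItem] // synthesized Decodable works again
}

let json = #"""
{
  "name": "Casey's Corner",
  "menu": [
    {"itemType": "drink", "drinkName": "Dry Vodka Martini"},
    {"itemType": "appetizer", "appName": "Nachos"},
    {"itemType": "entree", "entreeName": "Steak", "temperature": "Medium Rare"}
  ]
}
"""#

let restaurant = try JSONDecoder().decode(Restaurant.self, from: Data(json.utf8))
for item in restaurant.menu {
    switch item {
    case .drink(let d): print("Drink:", d.drinkName)
    case .appetizer(let a): print("Appetizer:", a.appName)
    case .entree(let e): print("Entree:", e.entreeName, e.temperature ?? "")
    }
}
```

The trade-off: `menu` becomes `[MenuItem]` instead of `[Any]`. Callers get an exhaustive `switch` rather than a chain of `as?` casts, at the cost of one wrapper type.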

ATP has had some fun things going on lately, and I wanted to call attention to them.

First, and from my perspective most importantly, I’ve been podcasting with my dear friends Marco and John for a decade‼️ The first episode of Neutral debuted on Thursday, 17 January, 2013. I’m incredibly thankful that this little adventure has grown from a goof, to a side hustle, to a profession.

A decade. My word.

In mid-2020, ATP launched a membership program. At the time, we offered an ad-free feed, and a small discount on our time-limited merchandise sales. A little while later, we added “bootlegs” — raw releases of our recordings moments after we hit “stop”.

The membership program has been going well, and I’m extremely thankful to anyone who has joined. 💙

Late last year, we decided to do some one-off, members-only episodes. We really wanted to do right by all our listeners, so these episodes are additive — they don’t involve any of the usual ATP subject matter, and if you skip them, it shouldn’t in any way hurt your enjoyment of the normal show.

To kick things off, we started “ATP Movie Club”, with each of us choosing a movie for the other two hosts to watch:

- Marco chose My Cousin Vinny, which I hadn’t seen

- I chose The Rundown, which neither John nor Marco had seen

- John chose Edge of Tomorrow, which neither Marco nor I had seen

When deliberating over my pick, I was set on something that neither John nor Marco had seen. Both of them expected me to choose The Hunt for Red October, but John had already seen Hunt, so it was out.

This week, we righted that wrong and went back to cover The Hunt for Red October.

While the whole discussion was tremendous fun, we immediately got derailed arguing about the best way to make popcorn. As tends to be the case, when the three of us get into it with each other, it makes for some of the most fun listening.

None of the above should be a surprise to regular ATP listeners, but I wanted to call attention to it here on my website, too.

We’ll probably do some ATP Movie Club episodes again in the future, but as I write this, we plan to put that aside for at least a little while. We have a tentative plan for something new for our next members-only episode, but we’ll see what shakes out, and when it happens. No promises.

If you have an idea of something fun for the three of us to do, please feel free to reach out to all of us or just to me. We’d love some ideas or inspiration for things to do in the future!

And if you’re not already a member, I’d love for you to check it out.

Late last year, I got a Sonos setup for my living room. I chose the Premium Immersive Set, which includes:

- Arc Soundbar

- 2 × One SL rear surrounds

- Their third-generation Subwoofer

I cannot overstate how great this system sounds. Surely better can be had, but Sonos makes everything so simple. Furthermore, their ability to pipe audio from anywhere to anywhere makes AirPlay 2 look positively barbaric by comparison. Truly. I say this as a person who makes a living talking about how much I love Apple.

Sonos has a couple of really useful features when you’re watching television.

- Night Sound: At lower volumes, quiet sounds will be enhanced and loud sounds will be suppressed

- Speech Enhancement: Increases the volume of speech

These features are super great for nighttime viewing, particularly when your living room is directly below your son’s bedroom. Together, the Sonos will tone down the loud booms that are most likely to wake him up, but also bring up the relative volume of speech, so we don’t need to rely on subtitles.

As great as this is, I never remember to turn them off; instead, I attempt to watch TV the following day — often to watch a guided workout with music behind it — and wonder why everything sounds so wonky. Though it only takes a couple seconds to pop open the app and turn off Night Sound & Speech Enhancement, it gets old when you do it every day. There must be a way to automate switching these off, right?

One of the great things about being a nerd developer is that you can pretty quickly put together a few tools to make something happen. Last night, I figured I’d start digging into the Sonos API and see if I could whip something up. Thankfully, after only a few minutes down that path, I realized there must be something further up the stack that I could use.

Enter SoCo-CLI, which is a command-line front-end to SoCo.

SoCo-CLI allows you to do a stunning number of things with your Sonos system via the command line.

For me, I needed to do two things, both of which are easily accomplished using SoCo-CLI. Note that my system is called Living Room, but SoCo-CLI is smart enough to match my speaker name by prefix only: `Liv` is automatically parsed to mean Living Room. With that in mind:

I needed to turn off speech enhancement:

```shell
sonos Liv dialogue_mode off
```

I needed to turn off night sound:

```shell
sonos Liv night off
```

I can also combine these into one command in SoCo-CLI:

```shell
sonos Liv dialogue_mode off : Liv night off
```

Now I just needed to run this overnight every night. I turned to my trusty Raspberry Pi, which is in my house and always on. I added a new line to my crontab:

```
0 3 * * * /home/pi/.local/bin/sonos Liv dialogue_mode off : Liv night off
```

Now, every night at 3 AM, my Raspberry Pi will ask the Sonos to turn off both night sound & speech enhancement. I never have to worry about remembering again, and my workouts in the morning will no longer sound off.
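A crontab line has five time fields (minute, hour, day of month, month, day of week), so `0 3 * * *` means minute 0 of hour 3, every day. To make that schedule concrete, here’s a rough Swift sketch that uses Foundation’s `Calendar.nextDate(after:matching:matchingPolicy:)` to find the next matching time. The fixed UTC calendar and the sample “now” are assumptions for reproducibility. cron on the Pi uses its local time zone, and this sketch ignores daylight-saving edge cases:

```swift
import Foundation

// Assumption: a fixed UTC calendar so the result is reproducible;
// cron itself runs in the Raspberry Pi's local time zone.
var calendar = Calendar(identifier: .gregorian)
calendar.timeZone = TimeZone(identifier: "UTC")!

// "0 3 * * *": minute 0, hour 3, any day of month, any month, any weekday.
let schedule = DateComponents(hour: 3, minute: 0)

// A hypothetical "now": 14 November 2022 at 22:30.
let now = calendar.date(from: DateComponents(year: 2022, month: 11, day: 14, hour: 22, minute: 30))!

// The first moment after `now` whose hour and minute match the schedule.
let nextRun = calendar.nextDate(after: now, matching: schedule, matchingPolicy: .nextTime)!
print(ISO8601DateFormatter().string(from: nextRun))
```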

🤘🏻

Today I joined Allison Sheridan on her podcast Chit Chat Across the Pond. On this episode, we discuss our many and varied home automations. Many of which — particularly for me — are utterly bananas. Most especially, my oft-derided-but-darnit-it-works-for-me garage door opener notification system.

I really really enjoy using Shortcuts, but I feel like I’m a complete novice. Discussing Shortcuts — and automations in general — gives me tons of inspiration for myself. Allison provided plenty of those examples.

This episode comes in well under an hour, but it packs a lot into not a lot of time.

Every podcast is, to some degree, a pet project. I’ve hosted some, and guested on many. This guest appearance was something else entirely.

Martin Feld is a PhD student at the University of Wollongong, and is doing his thesis on podcasting, RSS, and more. As part of his thesis, he’s hosting a podcast, which he cleverly named Really Specific Stories.

Really Specific Stories is an interview show, where Martin deftly asks his guests about their origin stories, how they got into podcasting, their relationship with the open internet, and more.

On this episode, Martin and I walked through my path into podcasting, starting all the way — and I’m not kidding — from when I was a toddler. It was a fun discussion, and I’m honored that Martin asked me to be a part of the project.

I’m also in the company of a ton of amazing guests; do check out the other episodes as well.

Despite being willing to go to my grave defending °F for the use of ambient air temperatures, America gets basically all other units wrong. Inches/miles, pounds, miles per hour, etc. All silly. We should be all-in on metric… except °C. I don’t care how air feels outside; I care how I feel outside.

Anyway.

Americans will generally write dates in MM/dd/yy format; as I write this, today is 11/14/22. This is dumb. We go broad measure → specific measure → vague measure.

The way Europeans do it, dd/MM/yy, makes far more sense. You go on a journey from the most-specific to the most-vague: day → month → year.

For general-purpose use, I prefer the European way; today is 14/11/22.

Yes, I know that ISO 8601 is a thing, and if I’m using dates as part of a filename, I’ll switch to 2022-11-14. Same with database tables, etc. But in most day-to-day use cases, the year is contextually obvious, and it’s the current year. So, for the purposes of macOS’s user interface, I set it to dd/MM/yy.

Prior to Ventura, you could go into System Preferences, move little bubbles around, and make completely custom date/time formats. With the switch from System Preferences to System Settings, that screen was cut. So, there’s no user interface for making custom formats anymore.

I asked about this on Twitter, and got a couple of pretty good answers. One, from Alex Griffiths, pointed me to a useful and helpful thread at Apple. The other, from Andreas Hartl, pointed me to another useful and helpful thread at Reddit. Between the two of them, I was able to accomplish what I wanted, via the command line.

Apple has four “uses” that roughly correspond to the length of the date string when it’s presented. As expected, they use ICU format characters to define the strings and store them. They’re stored in a dictionary in a plist that’s buried in ~/Library, but the command line makes it easy to modify; we’ll get to that in a moment.

Here’s where I’ve landed so far:

| Use | Key | Format | Example |
|---|---|---|---|
| Short | 1 | `dd/MM/yy` | 14/11/22 |
| Medium | 2 | `dd/MM/y` | 14/11/2022 |
| Long | 3 | `dd MMM y` | 14 Nov 2022 |
| Full | 4 | `EEEE, dd MMMM y` | Monday, 14 November 2022 |
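Foundation’s `DateFormatter` also understands ICU date patterns, so you can sanity-check the four formats before writing them with `defaults`. Here’s a minimal sketch, assuming the `en_US_POSIX` locale so month and weekday names come out in English. The `patterns` and `samples` names are illustrative, and macOS’s own UI may still render things slightly differently:

```swift
import Foundation

// The four ICU patterns from the table above, with the same keys.
let patterns: [(key: Int, format: String)] = [
    (1, "dd/MM/yy"),
    (2, "dd/MM/y"),
    (3, "dd MMM y"),
    (4, "EEEE, dd MMMM y"),
]

// Build 14 November 2022 explicitly so the output doesn't depend on today.
var calendar = Calendar(identifier: .gregorian)
calendar.timeZone = TimeZone(identifier: "UTC")!
let date = calendar.date(from: DateComponents(year: 2022, month: 11, day: 14))!

let formatter = DateFormatter()
formatter.calendar = calendar
formatter.timeZone = calendar.timeZone
// en_US_POSIX pins English month/weekday names regardless of system locale.
formatter.locale = Locale(identifier: "en_US_POSIX")

var samples: [Int: String] = [:]
for (key, format) in patterns {
    formatter.dateFormat = format
    samples[key] = formatter.string(from: date)
    print(key, samples[key]!)
}
```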

In order to store these in the appropriate plist, you need to issue four commands. Each has the same format:

```shell
defaults write -g AppleICUDateFormatStrings -dict-add {Key} "{Format}"
```

For example:

```shell
defaults write -g AppleICUDateFormatStrings -dict-add 1 "dd/MM/yy"
```

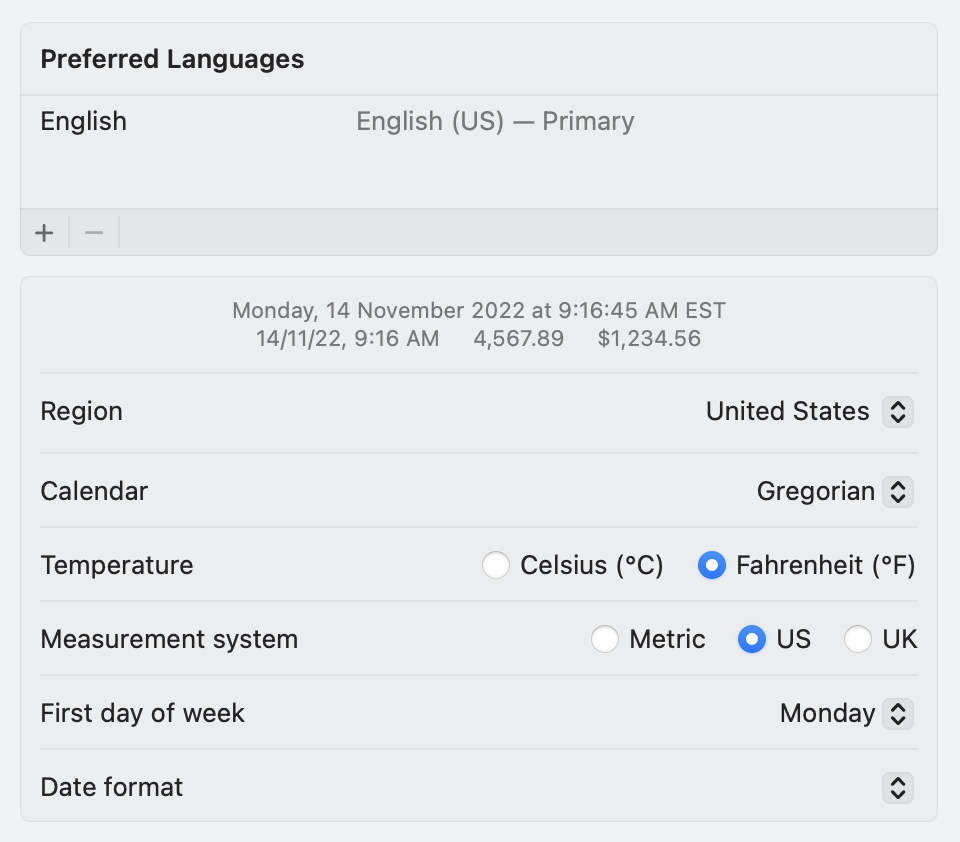

Once you complete this, you can go into System Settings → General → Language & Region. In there, you’ll note the Date Format drop-down appears to be blank, but the non-interactive sample should show what you’d expect:

I hope Apple brings back the user interface for this, but at least there’s a way to hack around it.

By happenstance, I feel like I’ve been on a new-dad tour lately. Last month, I spoke with Max shortly before he became a Dad. Today, I was the guest on, believe it or not, the iPad Pros podcast. We spoke about iPads a tiny bit, but mostly discussed what it’s like to be a new Dad. Tim, the host of the show, became a Dad just a short time before we recorded; this was a surprise to both of us, as the plan was to have this conversation before the baby showed up. Sometimes life has other plans. 😊

I am far from a perfect Dad; I can name a thousand ways in which I need to do better. However, I like to think I am honest about what I get right, and also what I get wrong. Particularly on Analog(ue), but also in other avenues like this website, I try to be truthful and not sugar-coat.

To have both Tim and Max ask me to join them on their bespoke “Dad” episodes is a tremendous honor. It’s also funny that this coming Saturday is the eight-year anniversary of me being a Dad in the first place. 😄